2022 Internet Law Year-in-Review

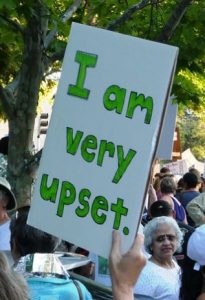

Three dynamics combined to make 2022 a brutal year for Internet Law.

First, the techlash is taking its toll. There is widespread belief that the major incumbents are too big, too rich, and too capricious to avoid pervasive government control. This reflects years of tech-trashing by the media and politicians–and an imbalanced discourse because there’s surprisingly little counternarrative to the techlash narrative (I hope to address this with my forthcoming book project).

Second, the Internet has become a partisan football. Both parties see political gold in attacking the Internet. In 2022, this partisan calculus prompted a steady stream of poorly conceived messaging bills. The Republicans have made the techlash the centerpiece of their policy platform; meanwhile, some of the most anti-Internet laws enacted in 2022 came from “blue” states.

Third, 2022 was an election year, so politicians on all sides pretended they cared about “protecting kids online.” There are important conversations to be had about the benefits and concerns of children using the Internet, but the child safety advocates won’t entertain any data that doesn’t support their preconceptions. So regulatory efforts under the “protect kids online” banner are routinely performative and pernicious.

As bad as 2022 was, 2023 will be worse. The threats are everywhere: Congress, state legislatures, the Supreme Court, internationally. Given the developments of 2022 and the past decade, we’re already witnessing the collapse of Web 2.0, and 2023 could officially mark the conclusion of that era. If you are wondering what happens after that, consider this.

* * *

Because it’s all going south, this year I present an unranked list of the worst developments for Internet Law:

Musk Buys Twitter. Musk is a one-man wrecking ball for Internet Law. Some of the problems he’s creating:

- Not paying outside counsel. You need top talent to win hard cases. At minimum, Twitter has to win its pending lawsuits, like Taamneh v. Twitter and Trump v. Twitter. Without adequate financial support for outside counsel, those cases could go sideways.

- Gutting his internal counsel department. Without enough bodies, corners will be cut–especially with compliance obligations, as the FTC has already warned.

- Twitter Files disclosures. Musk keeps disclosing materials that plaintiffs will eagerly cite against Twitter in future lawsuits. It’s amazing that Musk is either oblivious to this diminution of his asset’s value or doesn’t care.

- Actualizing the fears that Internet services don’t take CSAM seriously. Twitter is already being sued for mismanaging CSAM. Musk literally came out and publicly said that Twitter (under prior management) didn’t do a good job of combating CSAM. Plaintiffs will be citing that against Twitter too. Plus, Musk has gutted Twitter’s anti-CSAM capacity, so whatever problem he saw from the past is only going to get worse.

- Actualizing the fears of anti-competitive content moderation. Internet services have always had the THEORETICAL capacity to moderate content to hurt rivals, but that was a red line that most players in the industry never approached. Then, Musk brazenly and casually blocked links to his competitors BY NAME.

- Actualizing the fears of a capricious and thin-skinned Internet publisher calling content moderation shots. Internet services have always had the THEORETICAL capacity for their top brass to dictate individual content moderation decisions, but most executives want no part of this. In contrast, Musk has enthusiastically seized the power of moderating content to his own personal benefit (such as his ill-advised attempt to shut down information about ElonJet).

- Actualizing the fears that Internet services will target journalists. As part of Musk’s ElonJet crackdown, he blocked journalists for reporting on the story.

If Musk wants to light his investors’ $44B on fire, that’s between him and them. He who has the gold makes the rules; a fool and his money are soon parted; etc. However, many of Musk’s own-goals will affect all Internet services, not just Twitter. If Twitter botches one of its lawsuits, that creates adverse precedent for the industry. Plaintiffs will use Musk’s disclosures to survive motions to dismiss in Twitter cases; and other plaintiffs will cite them as evidence of what Internet services are doing. Regulators will use Twitter’s vulnerabilities to set rules for the entire industry. Basically, Musk’s mistakes pour gasoline on the regulatory fires for everyone. And if Musk ever flips any of Twitter’s long-standing legal or policy positions in litigation or lobbying, he could truly melt down the industry.

Collectively, Musk’s moves breed greater distrust about the content moderation practices among all regulators and consumers, which hurts the industry by providing more fuel for the techlash.

Henderson v. Source for Public Data. This was always a tough case involving possible conflicts between Section 230 and the Fair Credit Reporting Act. Navigating those conflicts needed care and nuance. Instead, a Trump-appointed Federalist Society judge chose to blow it all up. Going far beyond what it needed to address, the opinion redefined both the “publisher/speaker” and “another information content provider” language in the statute, opening up multiple new angles for plaintiffs to attack Section 230. In the process, the panel seemingly partially overturned the seminal Zeran v. AOL case, which helped define the Web 2.0 revolution. For now, other courts are acknowledging how unpersuasive this opinion is. Will that last?

Are Search Engines Common Carriers? No, of course they aren’t. That’s like asking what 19th Century railroads and modern-day keyword-driven algorithmic search engines have in common with each other. (Spoiler: nothing). And yet…a #MAGA Ohio judge sealioned his rejection of Google’s motion to dismiss.

Europe. It’s impossible for me to keep up with all of Europe’s efforts to destroy the Internet. Two recent key developments were the Digital Markets Act and the Digital Services Act. They impose heavy compliance costs and are imbued with pervasive government censorship, so they ensure that the European UGC industry will consolidate even more and will become even less hospitable to new homegrown Internet startups.

CA AB 587. I have explained in detail how mandatory editorial transparency laws enable government censorship, both by prospectively channeling publisher behavior and through post hoc enforcement threats. (It’s one of the many ways the DSA is a censorship law). On the heels of the mandatory editorial transparency provisions in Florida and Texas’ social media censorship laws, the California legislature thought it could one-up those states by passing a law with at least 161 different disclosure requirements. #MCGA.

[Honorable mention: New York’s AB A7865A requiring editorial disclosures about “hateful conduct.” A constitutional challenge has been filed.]

NetChoice v. Paxton (5th Circuit opinion). Another #MAGA opinion from a Trump-appointed Federalist Society judge, and a full-throated work of Justice Thomas fandom. The opinion upheld every aspect of Texas’ social media censorship law. If this opinion is correct, the First Amendment protections for “free speech” and “free press” are functionally dead.

California AADC (AB 2273). (I blogged the AADC several times. Maybe start with this post). I didn’t rank this year’s list, but if I had, the AADC would easily rank as the biggest threat to the Internet. To comply with the law, most websites will need to validate users’ ages before letting them in. This checkpoint-driven approach destroys the seamless web, where we’re used to clicking back-and-forth freely. It also requires users to reveal highly sensitive personal information before they can access a service. This discourages visits to new sites, which will reward incumbents and thwart new market entrants. It also puts users’ privacy and security (including minors’!) at greater risk. Finally, it trains people (especially minors) that revealing sensitive personal information is a normal thing to do before being allowed to read content or engage with vendors, and this socialization (and the associated technological infrastructure) will be a boon for future government control of its citizens’ information consumption and behavior.

The point of the age verification is to give minors extra protection from a website’s practices. However, the law made it too legally risky to provide that special treatment, so rather than jump through those regulatory hoops, websites will simply close their doors to minor users. This has all kinds of downsides, including: (1) hindering minors’ ability to use the Internet to improve their education or express themselves; and (2) slowing down minors’ learning curve of how to navigate and use the Internet, even though such digital skills will be crucial to their personal and professional success for the rest of their lives.

In total, the AADC launches a bunch of huge and risky social experiments, none of which have been validated to be good ideas or to help the kids, all under the false pretense of “protecting kids.” History will harshly judge anyone who supported this law, and California constituents will be furious about this law when they realize its true consequences for them.

[Note 1: NetChoice has filed a challenge against the law.]

[Note 2: Louisiana HB 142 requires sites containing 1/3 porn to verify age. This is a baby version of the CDA, which was deemed unconstitutional 20+ years ago. But will anyone challenge it this time?]

* * *

As terrible as 2022 was, it could have been worse. Here are some of the bills that looked like they were going to get some traction (or be stuffed into a must-pass bill) that didn’t pass in 2022. Many/all of these are coming back in 2023, so the fight has been only paused, not won:

* KOSA. A remix and extension of the CA AADC at the federal level. Everyone who has objected vehemently to KOSA but stayed silent about the AADC needs to rethink their priorities. Defeating KOSA, without eliminating the AADC, is a hollow victory.

* SHOP SAFE. A bill that sought to accelerate the demise of online marketplaces by creating a new and unmanageable species of contributory trademark liability. While SHOP SAFE would have quickly wiped out the online marketplace niche, Congress still did plenty of harm to that niche in the disclosure-oriented INFORM Act (which, among other problems, botches the preemption clause, so it doesn’t even provide a single national standard for compliance).

* CA AB 2408. A bill that would create a new and unmanageable liability for teens becoming “addicted” to social media. This would have necessitated social media services kicking off teens, but the CA AADC partially moots the need for this bill because it will force social media services to toss all kids overboard anyway. Meanwhile, plaintiffs have brought an MDL over social media addiction, so this issue may resolve in the courts without any new legislation.

* Various antitrust bills that sought to regulate content moderation, including AICOA and OAMA. Sen. Klobuchar cared more about “winning” than about making good policy, so she struck a deal with Republicans to embrace government censorship of content moderation to get their votes. This cross-partisan deal caused her to lose the Democrats’ votes because everyone was wondering why she was so keen to advance #MAGA censorship of the Internet. Ironically, if she had abandoned her #MAGA content moderation fixation, she might have actually been able to get a win on other provisions.

* JCPA (Journalism Competition and Preservation Act). This bill would have created a link tax that would have primarily benefited vulture fund buyer-looters of print newspapers and partisanized newsy opinion sites like Breitbart. Given the scarcity of winners it would create at the expense of everyone else, and given how link taxes have failed across the globe, it’s amazing anyone was willing to publicly back this garbage.

* SMART Copyright Act. A very dumb law that would authorize the Copyright Office to force UGC sites to adopt expensive and overrestrictive technological controls as dictated by copyright owners.

* EARN IT Act. This bill started as a twofer: ban E2E encryption AND repeal Section 230 for CSAM claims. Censorship-minded advocates won’t stop until they achieve both goals.

* * *

Some other developments of note in 2022:

Government Use of Social Media. After the Knight Institute v. Trump decision, it looked like government officials simply couldn’t block constituents on social media. Since then, instead, courts have developed baroque distinctions between legitimate and unconstitutional content moderation by the government. Compare the Ninth Circuit (Garnier v. O’Connor Ratcliff) with the Sixth Circuit (Lindke v. Freed).

hiQ v. LinkedIn. The long-running hiQ v. LinkedIn case reached a denouement that left virtually every key legal question unresolved. Still, the news was bad for hiQ. hiQ went out of business, lost a ruling that it potentially breached LinkedIn’s TOS, and settled with a complete victory for LinkedIn–including a cash payment to LinkedIn (though I bet no cash will actually move), an injunction against scraping, and a requirement to dump the scraped data. So what do we make of the earlier rulings that suggested hiQ had a legally protected right to scrape? ¯\_(ツ)_/¯

CCB Launches. We have a new venue for copyright litigation. Yay? The early returns have been underwhelming.

Jawboning Cases Keep Failing. Many plaintiffs, most of them #MAGA, have claimed that the Internet services blocked them at the government’s instructions. These cases have universally failed, including a complete dismissal by the Ninth Circuit (Doe v. Google).

Amending TOSes. It’s a simple Q: if a service has properly formed a TOS, how can the TOS be amended? We’ve always known the only sure-fire way was another clickthrough, but for years there has been caselaw suggesting that some lesser amendment protocol could work. Those days may be over. Want to amend a TOS? You probably need a click. (1, 2).

Can 512(f) Claims Succeed? The answer remains “probably not,” but this year saw a rare appellate ruling for a 512(f) plaintiff.

Does Section 230(c)(2)(A) Really Matter? After its ridiculous drama with Domen v. Vimeo, the Second Circuit finally found a Section 230(c)(2)(A) defense it could actually stick with. Will that matter after the Supreme Court’s Gonzalez ruling?

Do Social Media Services Make Editorial Decisions? One court put it plainly:

Like a newspaper or a news network, Twitter makes decisions about what content to include, exclude, moderate, filter, label, restrict, or promote, and those decisions are protected by the First Amendment

I don’t understand how this is remotely debatable.

The Changing Competitive Set of Social Media Services. In 2022, a few competitive dynamics of note:

- Facebook’s stock price dropped substantially, below the financial thresholds set in Klobuchar’s antitrust bills. Oops.

- TikTok is dominating GenZ, which portends an inevitable erosion of the customer bases for Facebook, Instagram, and Snap.

- Musk bought Twitter, changing its competitive posture (more #MAGA, fewer journalists), decreasing its advertiser base, and otherwise causing Twitter’s implosion.

Apple. A few big developments in the Apple world: it dropped its pernicious plans to do client-side scanning for CSAM, and it will open up its platform to allow apps to be uploaded from places other than its app store.

Previous year-in-review lists from 2021, 2020, 2019, 2018, 2017, 2016, 2015, 2014, 2013, 2012, 2011, 2010, 2009, 2008, 2007, and 2006. Before that, John Ottaviani and I assembled lists of top Internet IP cases for 2005, 2004 and 2003.

* * *

My publications in 2022:

Advertising Law: Cases and Materials (with Rebecca Tushnet), 6th edition (2022)

Internet Law: Cases & Materials (2022 edition)

The Constitutionality of Mandating Editorial Transparency, 73 Hastings L.J. 1203 (2022)

How Santa Clara Law’s “Tech Edge JD” Program Improves the School’s Admissions Yield, Diversity, & Employment Outcomes (in process), __ Marq. IP L. Rev. __ (forthcoming 2023) (with Laura Lee Norris). See also Successful Outcomes From Santa Clara Law’s Tech Edge JD Experiment, Holloran Center Professional Identity Implementation Blog, Oct. 18, 2022 (with Laura Lee Norris)

Assuming Good Faith Online, 30 Catholic U.J.L. & Tech. __ (forthcoming 2023)

Zauderer and Compelled Editorial Transparency, 108 Iowa L. Rev. Online __ (forthcoming 2023)

The Plan to Blow Up the Internet, Ostensibly to Protect Kids Online, Capitol Weekly, August 18, 2022

The Story Behind the Lessons from the First Internet Ages Project (with Mary Anne Franks), Knight Foundation, June 2022

Reflections on Lessons from the First Internet Ages, Knight Foundation, June 2022

How Fair Use Helps Bloggers Publish Their Research, Association of Research Libraries blog, Feb. 18, 2022

NetChoice LLC v. Moody, amicus brief in support of the petition for certiorari, November 2022

Comments to the CPPA’s Proposed Regulations Pursuant to the Consumer Privacy Rights Act of 2020, California Privacy Protection Agency, August 2022

NetChoice LLC v. Paxton, amicus brief in support of emergency application for administrative relief in the U.S. Supreme Court, May 2022

Letter to Congress opposing the SHOP SAFE Act on behalf of 26 trademark academics, March 2022
