Ninth Circuit Strikes Down Key Part of the CA Age-Appropriate Design Code (the Rest is TBD)–NetChoice v. Bonta

The California Age-Appropriate Design Code (AADC) is a “think of the kids” law that nominally purports to protect kids’ privacy. However, as I will explain in my forthcoming Segregate-and-Suppress article, it hurts children and advances censorship…so it’s just bad policy–and unconstitutional, too (as the legislature was repeatedly warned).

On Friday, the Ninth Circuit declared that the AADC’s requirement that businesses conduct impact assessments before changing their websites is unconstitutional. This decision knocks out many other provisions of the AADC that are linked to the impact assessment, gutting key parts of the law. Most critically, the court recognized how preparing impact assessments was “implicit censorship,” despite the many pretextual efforts to normalize that censorship by couching it as a child safety/privacy law that only regulated businesses’ conduct. The court correctly saw through those distortions.

Consistent with the Moody decision from last month, the court remands the remaining provisions of the AADC to the district court to redo its facial analysis. I believe many of the remaining provisions will be once again declared unconstitutional due to the AADC’s predicate requirement that businesses age-authenticate their users, something that the 9th Circuit barely acknowledged but that casts a long shadow over the constitutionality of the entire law.

Because much of the law failed now and the rest should fail again soon enough, I think this opinion is a major win for free speech and for Internet users. Further, I think the opinion throws a lot of shade on other laws that require businesses to conduct impact or risk assessments, a currently trendy pro-censorship regulatory tool in privacy and AI regulations. If this decision stands up after the inevitable SCOTUS appeal, this ruling will help invalidate many misguided attempts to regulate the Internet (and AI too).

The DPIA Requirement

Facial Challenge

The AADC requires many websites to prepare Data Protection Impact Assessment (DPIA) reports “identifying, for each offered online service, product, or feature likely to be accessed by children, any risk of ‘material detriment to children that arise from the data management practices of the business'” and to take steps to mitigate or eliminate those risks.

There are many good reasons why businesses don’t want to prepare these reports, including the preparation costs, the bureaucracy and delays that slow down product iterations, and the odds that enforcers will use the reports to show either that the business knew there was a risk and didn’t adequately mitigate it or didn’t know there was a risk because it didn’t prepare the DPIA properly. (In the AADC’s case, the AG just needs to ask the business to hand over the DPIA reports–no reasonable suspicion, probable cause, or subpoenas required). The court calls the DPIA requirement the AADC’s “chief” mandate because the DPIA necessarily forms the foundation of many AG enforcements of the AADC.

The court says that it can proceed with a facial challenge to the DPIA requirement despite Moody because the obligation affects all regulated entities:

Whether it be NetChoice’s members or other covered businesses providing online services likely to be accessed by children, all of them are under the same statutory obligation to opine on and mitigate the risk that children may be exposed to harmful or potentially harmful content, contact, or conduct online…in every circumstance in which a covered business creates a DPIA report for a particular service, the business must ask whether the new service may lead to children viewing or receiving harmful or potentially harmful materials

Is the DPIA Requirement a Content Regulation?

The court says the DPIA requirement triggers First Amendment scrutiny because (1) “the DPIA report requirement clearly compels speech by requiring covered businesses to opine on potential harm to children,” and (2) “the forced disclosure of information, even purely commercial information, triggers First Amendment scrutiny” (cite to Zauderer). It doesn’t matter that the AG is the only audience (I mention this point in my Mandating Editorial Transparency paper).

The court summarizes:

The primary effect of the DPIA provision is to compel speech…The State cannot insulate a specific provision of law from a facial challenge under the First Amendment by bundling it with other, separate provisions that do not implicate the First Amendment

The court also says that the DPIA requirement “deputizes covered businesses into serving as censors for the State” because the DPIA risk “factors require consideration of content or proxies for content.” That’s because “a business cannot assess the likelihood that a child will be exposed to harmful or potentially harmful materials on its platform without first determining what constitutes harmful or potentially harmful material.”

Scrutiny Level

[T]here is no question that strict scrutiny, as opposed to mere commercial speech scrutiny, governs our review of the DPIA report requirement…businesses covered by the CAADCA must opine on potential speech-based harms to children, including harms resulting from the speech of third parties, disconnected from any economic transaction….it not only requires businesses to identify harmful or potentially harmful content but also requires businesses to take steps to protect children from such content….We therefore conclude that the subjective opinions compelled by the CAADCA are best classified as noncommercial speech.

Note this assumes that businesses have the capacity to engage in non-commercial speech, even though the Supreme Court keeps suggesting that all corporate speech is commercial speech.

The court distinguishes the CCPA’s compelled disclosures, which include “the number of requests to delete, to correct, and to know consumers’ personal information, as well as the number of requests from consumers to opt out of the sale and sharing of their information.” The court says:

That obligation to collect, retain, and disclose purely factual information about the number of privacy-related requests is a far cry from the CAADCA’s vague and onerous requirement that covered businesses opine on whether their services risk “material detriment to children” with a particular focus on whether they may result in children witnessing harmful or potentially harmful content online. A DPIA report requirement that compels businesses to measure and disclose to the government certain types of risks potentially created by their services might not create a problem. The problem here is that the risk that businesses must measure and disclose to the government is the risk that children will be exposed to disfavored speech online.

So, the court suggests that the CCPA mandatory disclosures might benefit from Zauderer review because they involve “purely factual information.” The Zauderer test has more preconditions that the court ignores, but I guess this is what passes for Zauderer “analysis” nowadays. 🙄

(Also, no one (except perhaps the CPPA/AG) appears to pay attention to or care about the CCPA’s mandatory disclosures, so chalk that up as another “successful” feature of the CCPA/CPRA 🙄).

Scrutiny Analysis

The DPIA unsurprisingly fails strict scrutiny. The court says it’s not the least restrictive means to protect kids. Other options include “(1) incentivizing companies to offer voluntary content filters or application blockers, (2) educating children and parents on the importance of using such tools, and (3) relying on existing criminal laws that prohibit related unlawful conduct.” The court says another approach would be to pass laws that dictate website design or impose data management practices that apply equally to kids and adults. (To be clear, some of those laws might also be unconstitutional).

The AG pointed out that businesses doing the DPIA-required self-reflection would prompt them to do more to protect kids. The court responds that “the relevant provisions are worded at such a high level of generality that they provide little help to businesses in identifying which of those practices or designs may actually harm children. Nor does the presence of these factors overcome the fact that most of the factors the State requires businesses to assess in their DPIA reports compel them to guard against the risk that children may come across potentially harmful content while using their services, which is hardly evidence of narrow tailoring.”

Note that much of the AADC is written at a “high level of generality” due to its UK origins. In Europe, vague language with substantial prosecutorial discretion is a feature, not a bug. In the US, where the First Amendment controls, not so much.

The court summarizes:

the State attempts to indirectly censor the material available to children online, by delegating the controversial question of what content may “harm to children” to the companies themselves, thereby raising further questions about the onerous DPIA report requirement’s efficacy in achieving its goals. And while the State may be correct the DPIA reports’ confidentiality reflect a degree of narrow tailoring by minimizing the burden of forcing businesses to speak on controversial issues, that feature may also cut against the DPIA report requirement’s effectiveness at informing the greater public about how covered businesses use and exploit children’s data.

It’s a major achievement for the court to recognize how the AADC “implicitly” censors the Internet. Kudos to the NetChoice lawyers and amici for helping the judges grasp this point.

The Rest of the Law

The court says that any AADC requirement dependent on the DPIA is problematic. However, the court says the AADC’s remaining parts “by their plain language, do not necessarily impact protected speech in all or even most applications.” (The court also says parts of the district court’s analysis read like as-applied analysis, not facial analysis).

For example, the court says “it is unclear whether a ‘dark pattern’ itself constitutes protected speech and whether a ban on using ‘dark patterns’ should always trigger First Amendment scrutiny,” and it might be a content-neutral restriction. Uh…the situation is a little more complicated:

First, the term “dark patterns” is trash jargon. It doesn’t belong in anyone’s vernacular, let alone a statute.

Second, the “dark patterns” concept is vague and superfluous. It attempts to establish a third category of speech that is neither “misleading” nor “non-misleading.” However, if “dark patterns” is coextensive with “misleading” speech, it overlaps existing regulations and adds nothing to the conversation. Otherwise, if it reaches non-misleading speech, then it’s got constitutional issues.

Third, and most significantly, the court hypothesizes about the legitimacy of the dark patterns restriction without noting that it governs interactions with children. But businesses won’t know how to comply with the dark patterns restriction for children unless they establish which users are children and which are adults, which raises the problems with the AADC’s mandatory age authentication that the court sidestepped.

The court also discusses a requirement to disclose various policies in plain language. The court says this compels speech but in “many circumstances, all or most of the speech compelled by this provision is likely to be purely factual and non-controversial.” Another bastardized Zauderer analysis. The court is 100% wrong that disclosing corporate policies is “purely factual and non-controversial” (indeed, the Zauderer ruling itself involved the disclosure of only a single corporate policy, and even that was contentious). The court also ignores that the requirement applies to plain language for kids, which again raises the age authentication issue.

Severability

Provisions dependent on the DPIA aren’t severable. However, the court says other parts may be. For example, the AADC’s Children’s Data Protection Working Group might be severable from the rest of the law. OK…though what purpose would that group serve if the rest of the law is enjoined?

Implications

What’s Next? This case will get appealed to the Supreme Court eventually. The question is whether it gets appealed now or if the case goes back to the district court first to redo the review of the non-DPIA provisions.

The panel lifted the injunction against the non-DPIA-linked parts of the AADC. I assume that NetChoice will want to quickly put those injunctions back in place if the AG doesn’t agree to forbear. Either way, any AG enforcement during the pendency of this litigation would be highly inadvisable due to the obvious problems with the law, and the enforcement would ensure standing for an as-applied challenge.

Age Authentication at SCOTUS. Last month, the Supreme Court granted cert in Free Speech Coalition v. Paxton, so the constitutionality of online age authentication requirements is back on the Supreme Court docket. Within 11 months, the Supreme Court will provide additional context on a crucial provision of the AADC. If I were the district court handling this case on remand, I would want to wait for the Supreme Court’s opinion.

Privacy as Censorship. As this ruling shows, the California legislature–like many others around the country–pretextually claimed to protect kids and privacy as a way to normalize or disguise its censorship. Many other “privacy” laws raise similar First Amendment issues. You can’t be a US privacy policymaker if you don’t have substantial expertise in Conlaw.

“Throw Everything at the Wall” Censorship. The AADC, like the Florida and Texas social media censorship laws, was a complex and multi-faceted package of bad policy ideas that, post-Moody, may be too overwhelming to support facial constitutional challenges. Fortunately, this panel doesn’t let the legislature off the hook so easily.

Nevertheless, Moody gives legislatures more incentives to pass “everything but the kitchen sink” censorship to hinder facial reviews–an unfortunate legal consequence that legislatures will eagerly weaponize. The court expressly calls this out, saying legislatures cannot “insulate a specific provision of law from a facial challenge under the First Amendment by bundling it with other, separate provisions that do not implicate the First Amendment.”

Implications for Impact Assessments Generally. The court said the DPIA requirement “clearly compels speech by requiring covered businesses to opine on potential harm to children.” But rendering opinions about content is an intrinsic consequence of many compelled “risk assessments” or “impact assessments” when applied to content publishers like website operators. Thus, all such mandates may be subject to the same constitutional critique.

That’s an extraordinarily powerful ruling. Mandatory risk/impact assessments have become a preferred policy tool of censorship-minded regulators around the globe, especially because they advance censorial outcomes without expressly showing their censorship. (Think of all of the laws saying that businesses must take steps proportionate to the risks–an easy way to create liability that sounds innocuous but is censorial when applied to content publishers). Those requirements prompt businesses to aggressively self-censor rather than expose themselves to unmanageable risks of liability.

This ruling puts those mandates in peril, at least in the U.S. This should shake up the privacy community, where doing DPIAs has become a major (and expensive) compliance practice due to the GDPR. It also has important implications for the efforts to regulate generative AI. Indeed, California is on the cusp of enacting SB 1047, which is built around mandating risk assessments for AI. This opinion highlights how those obligations are really just censorship of AI that may not survive constitutional scrutiny.

European Regulatory Models Don’t Work in the U.S. The CA AADC was modeled on the UK AADC. The court shows how the transposition doesn’t work when the First Amendment applies. Other landmark EU laws, like the GDPR and DSA, similarly may not be extensible to the US because they contain provisions that can’t survive First Amendment scrutiny. This is another reason why I expect US Internet Law to structurally diverge from global Internet Law.

Zauderer is a Mess. Lower courts are mangling their Zauderer discussions. They are forgetting prerequisites and cutting corners on the (already minimal) review standards. The Supreme Court encourages such bad behavior through its own mischaracterizations of Zauderer. The Supreme Court needs to comprehensively revisit Zauderer and mitigate the mess it created.

Case Citation: NetChoice, LLC v. Bonta, 2024 WL 3838423 (9th Cir. Aug. 16, 2024)

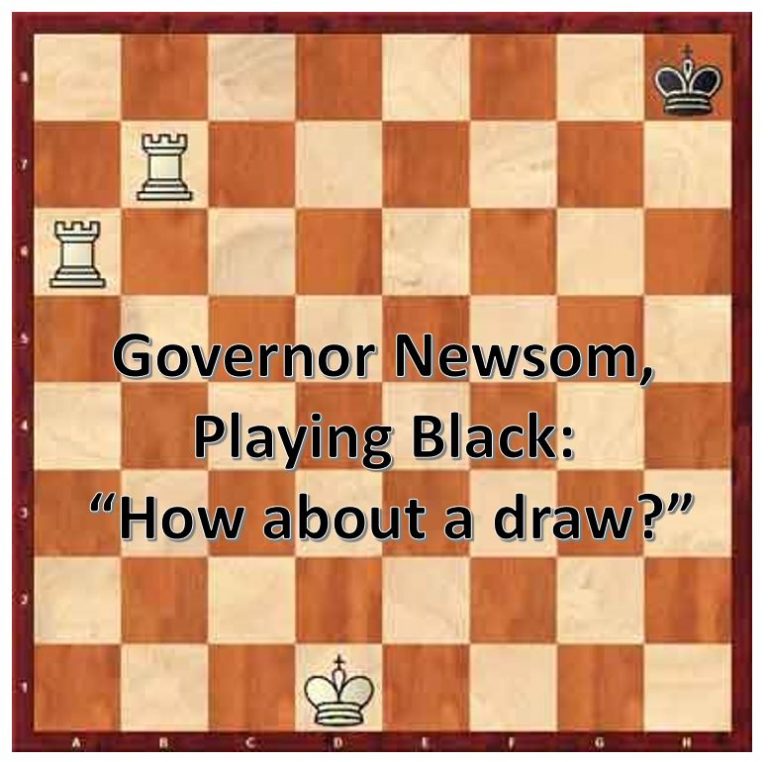

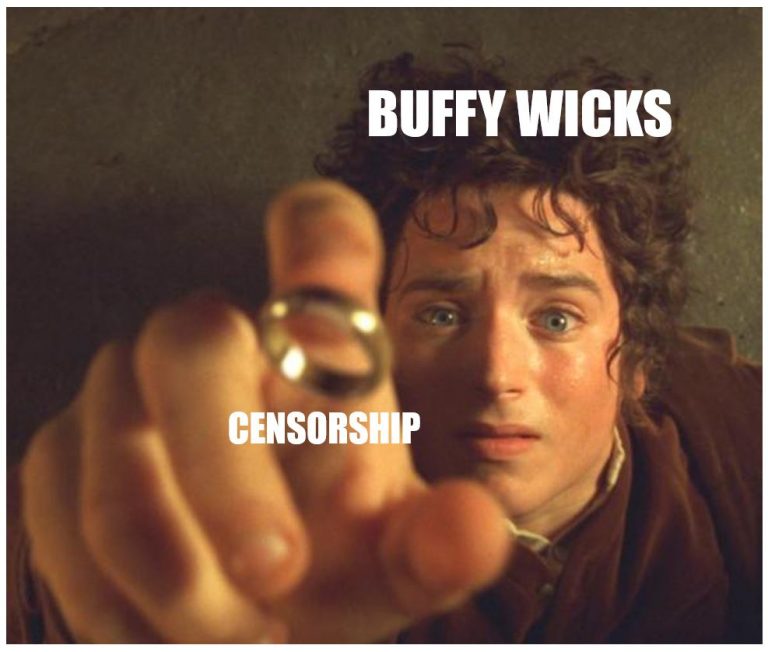

Memes

Some memes about the AADC that are worth resharing:

(Buffy Wicks was the legislative sponsor of the AADC).

(Baroness Kidron is British nobility who helped persuade the California legislature to model the CA AADC off the UK AADC).

(Governor Newsom begged NetChoice to withdraw its lawsuit “for the kids”).

(The California legislature patting itself on the back for passing the AADC).

Prior AADC coverage

- Comments on the Ruling Declaring California’s Age-Appropriate Design Code (AADC) Unconstitutional–NetChoice v. Bonta

- Privacy Law Is Devouring Internet Law (and Other Doctrines)…To Everyone’s Detriment

- Minnesota’s Attempt to Copy California’s Constitutionally Defective Age Appropriate Design Code is an Utter Fail (Guest Blog Post)

- Do Mandatory Age Verification Laws Conflict with Biometric Privacy Laws?–Kuklinski v. Binance

- Why I Think California’s Age-Appropriate Design Code (AADC) Is Unconstitutional

- Five Ways That the California Age-Appropriate Design Code (AADC/AB 2273) Is Radical Policy

- Some Memes About California’s Age-Appropriate Design Code (AB 2273)

- An Interview Regarding AB 2273/the California Age-Appropriate Design Code (AADC)

- Op-Ed: The Plan to Blow Up the Internet, Ostensibly to Protect Kids Online (Regarding AB 2273)

- A Short Explainer of How California’s Age-Appropriate Design Code Bill (AB2273) Would Break the Internet

- Will California Eliminate Anonymous Web Browsing? (Comments on CA AB 2273, The Age-Appropriate Design Code Act)
