Comments on the Ruling Declaring California’s Age-Appropriate Design Code (AADC) Unconstitutional–NetChoice v. Bonta

[Sorry it’s taken me this long to get this blog post off my desk. I hope it was worth the wait.]

We’ve seen a flood of terrible Internet laws in the past few years, including the California Age-Appropriate Design Code (AADC). The AADC would require many businesses to sort their online visitors into adults and children–necessarily requiring age authentication–so that children can receive heightened statutory protections. Those protections are so onerous that many businesses would blocklist children rather than comply with the requirements—a calamitous outcome for children who need to prepare for their digital-first future.

Fortunately, a federal district judge preliminarily enjoined the AADC as unconstitutional. This opinion was a decisive win for Internet users…and an embarrassing loss for the AADC’s supporters and California legislators who embraced this bad law despite very clear warnings about its unconstitutionality. Of course, there will be an appeal, so the Internet’s fate remains unsettled.

Regulated Expressive Conduct

The AADC frames itself as a “privacy” law, but that’s always been a gross lie. It is and always has been about content censorship. The AADC requires all regulated content publishers to check their readers’ ages before allowing them to read the publisher’s content (i.e., their website). Those same restrictions also apply to authors: before authors can publish content online at third-party services, the services need to check the authors’ ages. When the AADC is properly reframed as imposing barriers to reading and publishing constitutionally protected content, the conditions it imposes clearly look like speech restrictions. It’s not a close call.

Thus, the court says: “the steps a business would need to take to sufficiently estimate the age of child users would likely prevent both children and adults from accessing certain content. The age estimation and privacy provisions thus appear likely to impede the ‘availability and use’ of information and accordingly to regulate speech.”

That’s true, and the court could have stopped there because the rest of the AADC will fail when the age authentication requirement falls. Instead, the court explains why some other specific provisions are speech restrictions:

- “restrictions on ‘collecting, selling, sharing, or retaining any personal information’ for most purposes limit the ‘availability and use’ of information by certain speakers and for certain purposes and thus regulate protected speech” (cleaned up).

- provisions requiring “businesses to identify and disclose to the government potential risks to minors and to develop a timed plan to mitigate or eliminate the identified risks, regulate the distribution of speech”

- With respect to the requirements that businesses follow their announced editorial policies, “the State has no right to enforce obligations that would essentially press private companies into service as government censors, thus violating the First Amendment by proxy”

You don’t have to buy those additional arguments to agree that the AADC structurally regulates speech.

The court wraps up by criticizing how the AADC clearly targets businesses but not government or non-profit entities. If you really care about the kids, shouldn’t the protections be ubiquitous? Because the AADC adopts the same definition of “business” as the CCPA/CPRA, this ruling highlights that any speech restrictions in those laws remain vulnerable to a constitutional attack.

Level of Scrutiny

Rather than definitively resolve what level of scrutiny applies, the court says the law fails even intermediate scrutiny, the least demanding form of heightened scrutiny. Given that speech restrictions will rarely qualify for rational-basis scrutiny, the AADC’s failure to survive any form of heightened scrutiny is a pretty good sign that other states won’t be able to draft around this opinion.

Substantial State Interest

As usual, the court credits the state’s interest in protecting children’s privacy and physical/psychological well-being.

Means-Fit

The law falls apart on the means-fit analysis. The court pokes holes in the AADC’s provisions one-by-one.

DPIAs. The AADC requires businesses to write data protection impact assessments of new offerings and provide those reports to the government. The court says the impact assessments don’t require businesses to look at service design configurations or to mitigate any identified risks, so they aren’t likely to advance the state’s child welfare goals. To be fair, the court would likely poke other holes in a revised impact assessment, so I feel like it would have been better for the court to critique the categorical problems with asking content publishers to do impact assessments before rolling out new editorial features than to nitpick the impact assessment’s scope.

Age Authentication. With respect to the AADC’s age assurance requirement, the court says it:

appears not only unlikely to materially alleviate the harm of insufficient data and privacy protections for children, but actually likely to exacerbate the problem by inducing covered businesses to require consumers, including children, to divulge additional personal information…as noted in Professor Goldman’s amicus brief, age estimation is in practice quite similar to age verification, and—unless a company relies on user self-reporting of age, which provides little reliability—generally requires either documentary evidence of age or automated estimation based on facial recognition.

The AADC disingenuously says that if a business doesn’t want to do age estimation, it can just treat adults as if they are children. The court rightly calls this out:

the State does not deny that the end goal of the CAADCA is to reduce the amount of harmful content displayed to children…..the logical conclusion [is] that data and privacy protections intended to shield children from harmful content, if applied to adults, will also shield adults from that same content. That is, if a business chooses not to estimate age but instead to apply broad privacy and data protections to all consumers, it appears that the inevitable effect will be to impermissibly “reduce the adult population … to reading only what is fit for children.”

High Privacy Default Settings. The AADC requires businesses to apply a high default privacy setting to children. The court credits evidence that some businesses would toss kids overboard rather than do this.

Follow-Your-Policy Requirement. With respect to the law’s requirement that businesses follow their stated editorial policies, the court says the state failed to show how doing so would improve children’s well-being. Plus, the follow-your-policies requirement wasn’t limited to children or their well-being, so it wasn’t narrowly tailored to the bill’s scope. Instead, “the State here seeks to force covered businesses to exercise their editorial judgment…The State argues that businesses have complete discretion to set whatever policies they wish, and must merely commit to following them. It is that required commitment, however, that flies in the face of a platform’s First Amendment right to choose in any given instance to permit one post but prohibit a substantially similar one.” Publishers always need the freedom to adopt new editorial policies, to make exceptions to existing editorial policies, and to make editorial judgment mistakes without fearing liability. A follow-your-policies requirement unconstitutionally overrides all of these needs.

Restrictions on Harmful Use of Children’s Data. One of the AADC’s restrictions limited harmful uses of children’s data. But the court notes that minors can range in age from 0 to 17, and a law is not narrowly tailored if businesses must judge appropriateness on a sliding scale. Plus, the court again credits evidence that businesses would toss kids overboard rather than comply with this requirement.

No Child Profiling. The AADC restricts services’ ability to profile children by default. The court credits evidence that content targeting can help children in vulnerable populations. Furthermore, “the internet may provide children—like any other consumer—with information that may lead to fulfilling new interests that the consumer may not have otherwise thought to search out. The provision at issue appears likely to discard these beneficial aspects of targeted information along with harmful content such as smoking, gambling, alcohol, or extreme weight loss.” The court may want to coordinate with the California appeals court, which just implied that ad targeting could violate the Unruh Act.

The court continues: “what is ‘in the best interest of children’ is not an objective standard but rather a contentious topic of political debate.” Elsewhere, the court adds: “some content that might be considered harmful to one child may be neutral at worst to another. NetChoice has provided evidence that in the face of such uncertainties about the statute’s requirements, the statute may cause covered businesses to deny children access to their platforms or content.”

This is so true. Yet pro-censorship forces routinely gloss over this point when using children as political props. Children have differing and sometimes conflicting informational needs. Any effort to adopt one-size-fits-all solutions for children disregards this basic fact, with the consequence that the rules actually prioritize some children’s needs (usually, those with majority characteristics) over the needs of other children (usually, those with minority characteristics), thus typically deepening digital divides and regressively rewarding existing privileges. Anyone who claims to be acting in the best interest of “children” online almost automatically disqualifies themselves as a serious commentator.

Data Transfers. The restrictions on collecting, selling, sharing, and retaining children’s data “would restrict neutral or beneficial content.” Plus, the state admitted that it sought to prevent children from consuming particular content, and the government cannot “shield children from harmful content by enacting greatly overinclusive or underinclusive legislation.” (Just to note: children’s data is already protected by COPPA and the CCPA/CPRA, so it’s not like legislatures have ignored this issue).

The court also says: “the State provides no evidence of a harm to children’s well-being from the use of personal information for multiple purposes.”

“Dark Patterns.” The AADC restricted “dark patterns,” one of the stupidest jargon terms in Internet Law. (Note also that “dark patterns” are already restricted by the CPRA.) I think “dark patterns” are supposed to mean vendors’ efforts to subvert consumer choices, but (1) consent subversion efforts may already be banned by standard consumer protection law, without the need for meaningless jargon, and (2) it is difficult to distinguish “consent subversion” from the “ordinary persuasion” that every marketer legitimately engages in. In any case, the court says: “the State has not shown that dark patterns causing children to forego privacy protections constitutes a real harm. Many of the examples of dark patterns cited by the State’s experts—such as making it easier to sign up for a service than to cancel it or creating artificial scarcity by using a countdown timer, or sending users notifications to reengage with a game or auto-advancing users to the next level in a game—are not causally connected to an identified harm.” I’ll add that if they were connected to a harm, I bet they are already covered by other consumer protection laws.

Severability

After gutting the statute, the court says that it cannot be severed. In particular, with respect to the age authentication requirement, the court says it:

is the linchpin of most of the CAADCA’s provisions, which specify various data and privacy protections for children…compliance with the CAADCA’s requirements would appear to generally require age estimation to determine whether each user is in fact under 18 years old. The age estimation provision is thus also not functionally severable from the remainder of the statute

Does This Mean Legislatures Can’t Protect Kids Online?

There is no single regulatory solution that will protect children online without unacceptable collateral costs. As the expression goes, it takes a village to raise a child, and that includes raising them online. We need everyone involved–parents, schools, child safety experts, regulators, and the kids themselves–to talk through the many options and tradeoffs.

Unsurprisingly, regulators haven’t done a lot of this hard work. Instead, they have relied on simplistic regulatory approaches like requiring businesses to sort kids from adults–a tool that remains clearly unconstitutional, as we’ve known since 1997 and as reiterated in this opinion and the recent Texas and Arkansas opinions. Once we take mandatory online age authentication off the policy table, we will clear the way for the much harder and more complicated conversations that need to take place. There will be no quick fixes or consequence-free options; and until we move the conversation forward without mandatory online age authentication, there are unlikely to be any wins at all.

A Special Note for the Privacy Advocate Community

I think mandatory online age authentication poses one of the greatest threats to online privacy and security that we currently face. Don’t like how businesses mishandle consumer data? Then don’t require consumers to hand off highly sensitive information. Worry that too many consumers fall for phishing attacks? Then don’t teach consumers that it’s ordinary and natural to give highly sensitive personal information to whoever asks for it, even if they are sketchy. Worry that kids will grow up never knowing privacy? Then don’t teach them that disclosure of highly sensitive personal information is standard practice to navigate the Internet. Worry about government misuse of personal information? Then don’t build a giant infrastructure that will be turned into an extension of the surveillance state.

In other words, if we lose the battle over mandatory online age authentication, then any other regulatory gains on privacy and security will be swamped by the erosion of privacy and security due to mandatory online age authentication. That makes this topic the #1 battle privacy advocates should be fighting.

AND YET…I continue to hear mostly deafening silence from privacy advocates on this topic. The future of online privacy and security is being decided without much guidance from the privacy community. Worse, I’ve seen a few privacy advocates try to rehabilitate the AADC’s provisions (including the age authentication requirement), which makes me feel gaslighted. If you want to poke holes in this opinion, I understand, but never at the expense of normalizing mandatory online age authentication. This makes me question whether the advocates have lost sight of the forest for the trees.

This opinion offers two other insights to the privacy advocate community.

First, privacy laws are often in tension with free speech rights. Thus, any pro-privacy effort that doesn’t do a careful First Amendment vetting isn’t ready for primetime. The AADC supporters chose to regulate in an alternative universe where the First Amendment doesn’t apply. That myopia/ignorance/willful disregard of fundamental constitutional rights did a real disservice to all Californians.

Second, privacy advocates are knowledgeable about many things, but they are not naturally experts in child safety and development. (Some privacy advocates specialize in the topic—I’m referring to the non-specialists). Child safety and wellbeing laws shouldn’t start with a privacy framework; there are too many tradeoffs and pitfalls that will be disregarded if the law prioritizes privacy considerations. The AADC was doomed from the start because it didn’t assemble the right experts to navigate the tradeoffs. (So long as it mandated online age authentication, it was always going to be doomed, but it could have pursued different approaches if the right experts had been part of the conversation).

Case citation: NetChoice LLC v. Bonta, 2023 U.S. Dist. LEXIS 165500 (N.D. Cal. Sept. 18, 2023)

Shout-Outs

The principals responsible for this ruling deserve a special shoutout.

Rep. Buffy Wicks proudly championed and sponsored this unconstitutional law.

Baroness Kidron claims responsibility for the UK Age-Appropriate Design Code. She evangelized Rep. Wicks, and then the California legislature, on the “wisdom” of transplanting the AADC to California. #ProtectTheKids (from Kidron).

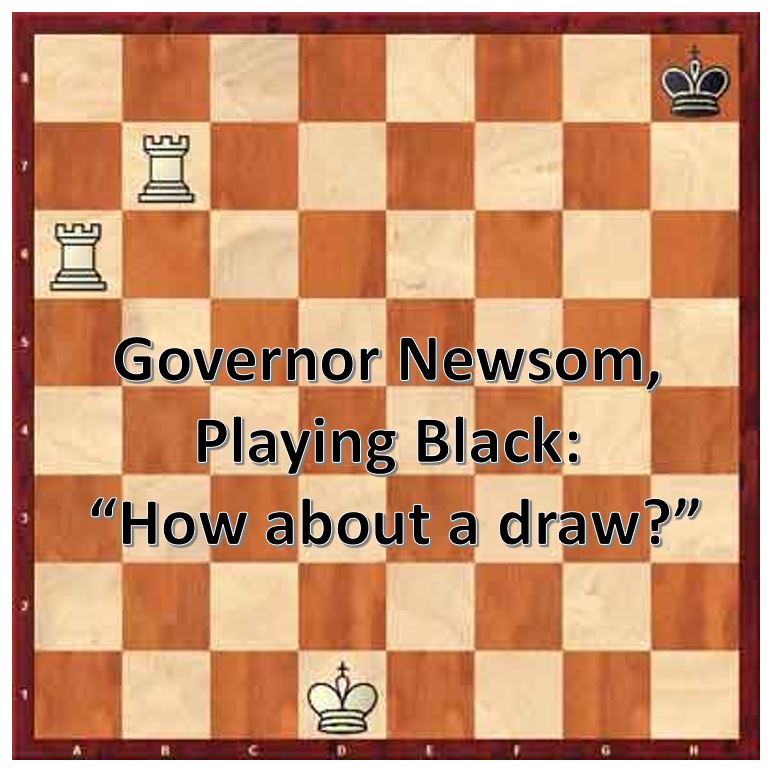

Gov. Gavin Newsom gleefully signed this bill. Worse, in May, he tried to jawbone NetChoice into dropping the constitutional challenge, instructing NetChoice to “stop standing in the way of important protections for our kids and teens.” Yet, as this ruling makes clear, Gov. Newsom was the one standing in the way of the First Amendment’s very important protections for children. Still proud of this law, Gov. Newsom?

And finally, a shoutout to the California legislature for approving this unconstitutional law. Unanimously.

Prior AADC coverage

- Privacy Law Is Devouring Internet Law (and Other Doctrines)…To Everyone’s Detriment

- Minnesota’s Attempt to Copy California’s Constitutionally Defective Age Appropriate Design Code is an Utter Fail (Guest Blog Post)

- Do Mandatory Age Verification Laws Conflict with Biometric Privacy Laws?–Kuklinski v. Binance

- Why I Think California’s Age-Appropriate Design Code (AADC) Is Unconstitutional

- Five Ways That the California Age-Appropriate Design Code (AADC/AB 2273) Is Radical Policy

- Some Memes About California’s Age-Appropriate Design Code (AB 2273)

- An Interview Regarding AB 2273/the California Age-Appropriate Design Code (AADC)

- Op-Ed: The Plan to Blow Up the Internet, Ostensibly to Protect Kids Online (Regarding AB 2273)

- A Short Explainer of How California’s Age-Appropriate Design Code Bill (AB2273) Would Break the Internet

- Will California Eliminate Anonymous Web Browsing? (Comments on CA AB 2273, The Age-Appropriate Design Code Act)
