California’s “Protecting Our Kids from Social Media Addiction Act” Is Partially Unconstitutional…But Other Parts Are Green-Lighted–NetChoice v. Bonta

California SB 976, “Protecting Our Kids from Social Media Addiction Act,” is one of the multitudinous laws that pretextually claim to protect kids online. Like many such laws nowadays, it’s a gish-gallop compendium of online censorship ideas: Age authentication! Parental consent! Overrides of publishers’ editorial decisions! Mandatory transparency!

The censorial intent and effect are obvious, but First Amendment challenges to laws like this run into a boil-the-ocean problem. When legislatures flood the zone with multi-pronged censorship, as the Moody opinion encouraged them to do, deadlines and word count limits leave challengers too constrained to adequately address everything.

In this case, the legislature enacted a neutron bomb censorship law with a 4-month fuse, forcing everyone to scramble. On the day before the law took effect, the district court enjoined parts of the law but said that other parts may be constitutional. The court subsequently enjoined the provisions it had left standing until February 1 to see if the Ninth Circuit will extend the injunction pending its review.

This opinion is particularly painful because the judge repeatedly demonstrates that he is under- or ill-informed about basic social science principles. Ultimately, the challengers have to do more to educate the judge, but the time and space constraints made that hard to do. I’m hoping that the judge will reconsider some of his problematic assumptions as the case proceeds.

Challenge to Age Authentication Mandate Isn’t Ripe

The law takes effect in two stages. Starting January 1, 2025, the law applies when the services have “actual knowledge” that users are minors. Starting January 1, 2027, the law will impose mandatory age authentication on services. The CA AG is obligated to develop regulations about how services can implement age authentication.

(This delegation to rulemaking is designed to sidestep the fact that the California legislature still has zero clue about how to implement age authentication. That’s true even though the legislature previously passed a bill–the AADC–mandating it ¯\_(ツ)_/¯ The AADC is mostly enjoined, in a case also called NetChoice v. Bonta).

The court says the constitutional challenge to the mandatory age authentication (effective January 1, 2027) isn’t prudentially ripe yet. After reviewing the CDA/COPA litigation battles, the court summarizes: “a First Amendment analysis of age assurance requirements entails a careful evaluation of how those requirements burden speech. That type of evaluation is highly factual and depends on the current state of age assurance technology.” We won’t have all of those facts until we see how the rulemaking goes.

As I will explain in my Segregate-and-Suppress article, age “assurance” ALWAYS categorically and impermissibly burdens speech in multiple pernicious ways. As a result, I don’t think any further development of the facts can lead to any different outcome. We’ll get more information about this issue from the FSC v. Paxton case–oral arguments are January 15 🤞.

NetChoice made a variation of my argument, saying that age authentication always acts as a speed bump for readers accessing desired content. The court says that’s not so. The court notes that “many companies now collect extensive data about users’ activity throughout the internet that allow them to develop comprehensive profiles of each user for targeted advertising” and, mining that data, age authentication could “run in the background” without requiring any affirmative steps from readers to complete the authentication.

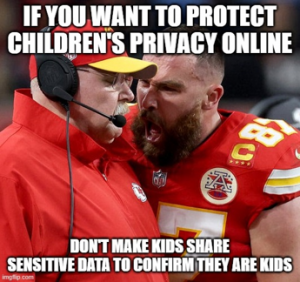

Whoa! I can’t believe the CA AG advanced this position, and I can’t believe the court took it seriously.

First, not every regulated service collects enough data to do this well. Second, we definitely don’t want to regulatorily encourage more services to data-mine kids. #Ironic. Third, any automated data mining will routinely make Type I/Type II errors, and it’s also easily gamed by spiking the dataset. Fourth, will this kind of data mining be legal in light of the existing and emerging privacy laws? Or would the CA AG, the CPPA, and the privacy plaintiffs’ bar go after any service deploying this approach to authenticating minors’ ages??? See, e.g., Kuklinski v. Binance.

If this court thinks automated “behind-the-scenes” data mining is a reasonable path towards protecting child safety online, then we’re doomed. Meanwhile, I hope the court’s openness to this kind of age authentication solution acts as the much-needed red-alert to the privacy community about the privacy threats emerging from the child safety regulatory pushes. The privacy invasions caused by mandatory age authentication have the realistic potential to overwhelm any other privacy gains made elsewhere.

Facial Challenge to Personalized Feeds

You probably will need tissues for this part of the opinion/blog post.

The law regulates the offering of personalized feeds that recommend user-generated or user-shared content based on “information provided by the user, or otherwise associated with the user or the user’s device,” subject to several limitations. The court denies the facial First Amendment challenge because NetChoice didn’t adequately show that “most or all personalized feeds covered by SB 976 are expressive.”

This sounds counterintuitive, and it comes from a problematic reading of the Moody case. The court acknowledges that Moody supports the challengers: “Moody uses sweeping language that could be interpreted as saying that all acts of compiling and organizing speech, with nothing more, are protected by the First Amendment.” However, the court notes (correctly) that the Moody majority opinion hedged its conclusion, saying it wasn’t addressing “feeds whose algorithms respond solely to how users act online—giving them the content they appear to want, without any regard to independent content standards.”

You may recall the source of the hedged line. As Joan Biskupic of CNN explained, Justice Kagan added this line to persuade Justice Barrett to switch from Justice Alito’s opinion to hers. Because it’s the product of backroom dealmaking, the hedged line represents a murky compromise that no one really understands. It appears to contemplate a set of hypothesized technological interactions that may not exist in the real world.

Nevertheless, the hedged line gets this judge to embrace a conclusion that broadly conflicts with the tenor and text of the Moody opinion. So long as there’s a scenario where the regulation of personalized algorithms is permitted by the First Amendment, the Moody opinion indicates that the court should deny the facial First Amendment challenge. This judge doesn’t seem to care whether that scenario is wholly hypothetical and speculative; and the procedural posture of a facial challenge puts the burden on the challenger (in this case, NetChoice) to disprove this speculative hypothetical scenario, which isn’t easy to do.

The court tries to retcon the Moody hedged line to imagine how a non-personalized feed might exist in the real world. Distinguishing print publishers’ editorial discretion at issue in the Tornillo case, the court says:

Personalized feeds on social media platforms are different [than newspapers]. Rather than relying on humans to make individual decisions about what posts to include in a feed, social media companies now rely on algorithms to automatically take those actions.

The court goes on to speculate that the First Amendment would not necessarily protect non-personalized feeds, even if they are purely hypothetical and don’t exist in the real world. For example, the court says:

If a human designs an algorithm for the purpose of recommending interesting posts on a personalized feed, the feed probably does reflect a message that users receiving recommended posts are likely to find those posts interesting. This perspective suggests that an algorithm designed to convey a message can be expressive.

But what if an algorithm’s creator has other purposes in mind? What if someone creates an algorithm to maximize engagement, i.e., the time spent on a social media platform? At that point, it would be hard to say that the algorithm reflects any message from its creator because it would recommend and amplify both favored and disfavored messages alike so long as doing so prompts users to spend longer on social media.

What??? Such a logic pretzel here. First, the court is confused by treating a prioritized message as a “disfavored” message. If the service sets its algorithm to prioritize engagement, then the resulting messages by definition aren’t “disfavored.” They are exactly what the editorial process wanted to favor. Second, optimizing for engagement is a choice many non-Internet editorial publishers make. There’s literally a trope for this: “if it bleeds, it leads.” Third, optimizing for engagement is a dumb editorial choice because it leads to long-term reader dissatisfaction, as has been proven many times, another reason why this discussion is hypothetical, not practical.

The court continues:

To the extent that an algorithm amplifies messages that its creator expressly disagrees with, the idea that the algorithm implements some expressive choice and conveys its creator’s message should be met with great skepticism. Moreover, while a person viewing a personalized feed could perceive recommendations as sending a message that she is likely to be interested in those recommended posts, that would reflect the user’s interpretation, not the algorithm creator’s expression. If a third party’s interpretations triggered the First Amendment, essentially everything would become expressive and receive speech protections

As I already said, the court is wrong to say that a publisher “disagrees” with prioritized content’s message when the publisher editorially prioritizes engagement. The court is also wrong to denigrate the editorial value of how publishers’ prioritization of content items affects how readers consume the content. That’s not just a “user’s interpretation,” that is the publisher’s intended consequence of its editorial choices. This judge needs an education in media studies STAT.

Since the judge is already dwelling in hypothetical universes, why not also take some sideswipes at Generative AI?

Imagine an AI algorithm that is designed to remove material that promotes self-harm. To set up that algorithm, programmers need to initially train it with data that humans have labeled ahead of time as either unacceptably promoting self-harm or not. Thus, when that AI algorithm initially begins to operate, it will reflect those human judgments, and courts can plausibly say that it conveys a human’s expressive choice. But as the algorithm continues to learn from other data, especially if the humans are not supervising that learning, that conclusion becomes less sound. Rather than reflecting human judgments about the messages that should be disfavored, the AI algorithm would seem to reflect more and more of its own “judgment.” Thus, it would become harder to say that the algorithm implements human expressive choices about what type of material is acceptable.

I guess we need to consider extending Constitutional rights to any hypothetical autonomous Generative AI that “expresses its own judgment”… 🤖💬

In a footnote, the court adds: “While the Court uses the word ‘judgment,’ it is not at all clear that, in any relevant sense, an AI algorithm can reason through issues like a human can.” What in the world is the court talking about here? This judicial freakout is another good reason to fear that Generative AI is doomed.

NetChoice also argued that restricting personalized feeds hides content. The court responds that:

all posts are still, in fact, available to all users under SB 976. SB 976 does not require removal of any posts, and users may still access all posts by searching through the social media platforms….the Court is skeptical that speech becomes inaccessible simply because someone needs to proactively search for it. If that were the case, library books would be inaccessible unless a librarian recommends them because libraries hold too many books for a single person to sort through.

Seriously? There’s a huge difference between discovery and search. In particular, people don’t know what to search for unless the discovery element suggests what they should look for. Thus, cutting off the discovery component leaves searchable content in practical obscurity. Surely the First Amendment recognizes that compelled obscurity. The court needs an education in information science STAT.

Seeing the court’s paralysis from the hedged line, it’s obvious how the state benefits from the challenger’s burdens to establish a facial challenge. However, the court’s discussion doesn’t provide a clean bill of Constitutional health for the statute. If the state ever has to defend an as-applied constitutional challenge, the state will have to show that the personalized feed at issue matches the hypothesized feeds in the hedged line–which it almost certainly can’t do, because those hypothesized feeds probably do not exist. So when different burdens of proof apply, the Moody case’s other emphatic endorsements of algorithm-encoded editorial judgments would almost certainly pose a major challenge to the law’s constitutionality.

Facial Challenge to Push Notifications

The law restricts the time periods when services can push notifications to minors. The court says “Unlike with personalized feeds, there is little question that notifications are expressive.”

Hold up. The Moody hedged line reserved judgment on “feeds whose algorithms respond solely to how users act online—giving them the content they appear to want, without any regard to independent content standards.” If that exists at all, user-requested push notifications sound pretty close to this, no? In other words, saying that personalized feeds aren’t always expressive but push notifications are…well, that’s a choice. ¯\_(ツ)_/¯

(To be clear, push notifications are expressive because they are just another modality to publish content. I’m objecting to the inconsistency of the court’s internal “logic”).

The court says that the restrictions on push notifications are content-neutral, even though the law only applies to some publishers and not others (publishers of consumer reviews are excluded). I didn’t understand the court’s discussion here. I think differential treatment among different types of publishers should trigger strict scrutiny because the distinctions ultimately depend on the content each publishes.

In any case, the court applies intermediate scrutiny but puts the burden of proof on the state (I didn’t understand why). The court says the state has an important government interest in protecting children’s health. However, the court says “in claiming an interest in protecting children, governments must go beyond the general and abstract to prove that the activities they seek to regulate actually harm children.” 🎯 The court says “the provisions are extremely underinclusive” because the ban only applies to certain services, while notifications from unregulated services could be just as disruptive. Thus, “by allowing notifications from non-covered companies, SB 976 undermines its own goal. As a result, SB 976 appears to restrict significant amounts of speech for little gain.” 💥 Enjoined.

Facial Challenge to Default Settings

The law restricts five default settings that services must set. Three overlap the prior discussion: two relate to personalized feeds (not enjoined) and one relates to push notifications (enjoined). The other two are not enjoined.

Parental Consent for Seeing Number of “Likes”

The court doesn’t enjoin this default setting: “It is far from obvious that this setting implicates the First Amendment at all because the underlying speech is still viewable. Further, the Court sees little apparent expressive value in displaying a count of the number of total likes and reactions.” If intermediate scrutiny applied, it would “easily” survive. “By removing automatic counters, the setting only makes it harder to fixate on the number of likes received and therefore discourages minors from doing so. Finally, since the underlying reactions are still viewable, virtually no speech has been blocked.”

The court needs an education on how metadata is expressive, STAT.

The court sidesteps the biggest problem with this default setting, the parental consent requirement. I will explain in my Segregate-and-Suppress paper that, among other problems with parental consent, services have no viable way of authenticating parental status. This court doesn’t care because it doesn’t value the underlying speech, but at some point the courts will have to wrestle with this extremely problematic issue.

Default Setting That Only Friends Can Comment on a Child’s Post

The court says this restriction on comments is speech-restrictive but content-neutral. The court says this survives intermediate scrutiny:

It is well-known that adults on the internet can exploit minors through social media, and implementing a private mode would reduce that danger. It is not particularly restrictive because the minor can still speak to any user she wishes to if that user requests to connect and the minor accepts. And the ability for users to request to connect with minors leaves open adequate channels of communication.

I mean…there’s so much wrong with this passage. We don’t want to encourage minors to connect with more strangers, which arguably this ban would do. Plus, forcing individuals to comment somewhere else is a major speech restriction. Recall that Trump tried to justify his Twitter blocks by saying that users could still post elsewhere on Twitter, and the Second Circuit rejected that argument. Once again, the court is tone-deaf about the information science implications of its analysis.

Compelled Disclosures

The law requires services to disclose the number of minors on their services, the number of parental consents received, and the number of minors who have the default settings in place. The court says Zauderer doesn’t apply because these disclosures aren’t commercial speech under the Bolger test. That’s correct, but the Supreme Court recently has been treating all corporate speech as Zauderer-eligible. The court explains:

the compelled information does not seem commercially relevant. It is not like terms of service that a consumer might be interested in when deciding whether to use a social media platform. Nor does it give much insight into how covered entities run their social media platforms; rather, the disclosures report how users behave on those platforms. The disclosures also say nothing about the quality of features on those platforms that might be relevant to consumers deciding between different platforms

I take the position that TOSes aren’t ads either.

With Zauderer out of the way (yay!), the court says the disclosure obligation triggers strict scrutiny because it is content-based. The court says it’s not a narrowly tailored obligation: “The Court sees no reason why revealing to the public the number of minors using social media platforms would reduce minors’ overall use of social media and associated harms. Nor does the Court see why disclosing statistics about parental consent would meaningfully encourage parents to withhold consent from social media features that might cause harm.”

Implications

The CA AG took a victory lap on this opinion, but that seems premature. The court sidestepped the age authentication issue and deferred to the law based on one hedged line from Moody and the burden of proof in facial challenges.

Also, let’s not lose sight of the fact that parts of the law were in fact enjoined as likely unconstitutional. Great job, California legislature! It continues to self-actualize as a censorship manufacturing machine.

The court partially refused the preliminary injunction on Dec. 31, and NetChoice immediately appealed the ruling to the Ninth Circuit. On January 2, the court enjoined the law for 30 days to give the Ninth Circuit a little time to decide whether it wants to extend the injunction pending its adjudication. The court explained why it issued this short-term injunction despite denying the longer-term injunction:

the First Amendment issues raised by SB 976 are novel, difficult, and important, especially the law’s personalized feed provisions. If NetChoice is correct that SB 976 in its entirety violates the First Amendment—although the Court does not believe that NetChoice has made such a showing on the current record—then its members and the community will suffer great harm from the law’s restriction of speech. Also, as to NetChoice’s members specifically, many may need to make significant changes to their feeds. Likewise, if NetChoice is correct in its argument, the public interest would tip sharply in its favor because there is a strong interest in maintaining a free flow of speech. Given that SB 976 can fundamentally reorient social media companies’ relationship with their users, there is great value in testing the law through appellate review.

(The real villain in this story is the California legislature putting this law in place with less than 4 months of lead time, forcing everyone–including the district court and Ninth Circuit judges–to scramble. A lot of lawyers and clerks did NOT have happy holidays due to this law).

I have no idea how the Ninth Circuit will view these rulings. I could easily see the Ninth Circuit reaching the opposite conclusion on each and every point. Ultimately, that highlights the limitations of the Moody case because judges have a lot of discretion to read it however they see fit.

Case Citation: NetChoice v. Bonta, 2024 WL 5264045 (N.D. Cal. Dec. 31, 2024) and NetChoice v. Bonta, No. 5:24-cv-07885-EJD (N.D. Cal. Jan. 2, 2025).
