New Article: “Content Moderation Remedies”

I’m excited to share my latest paper, called “Content Moderation Remedies.” I’ve been working on this project for 2+ years, and this is the first time I’m sharing the draft publicly. I think many of you will find it interesting, so I hope you will check it out.

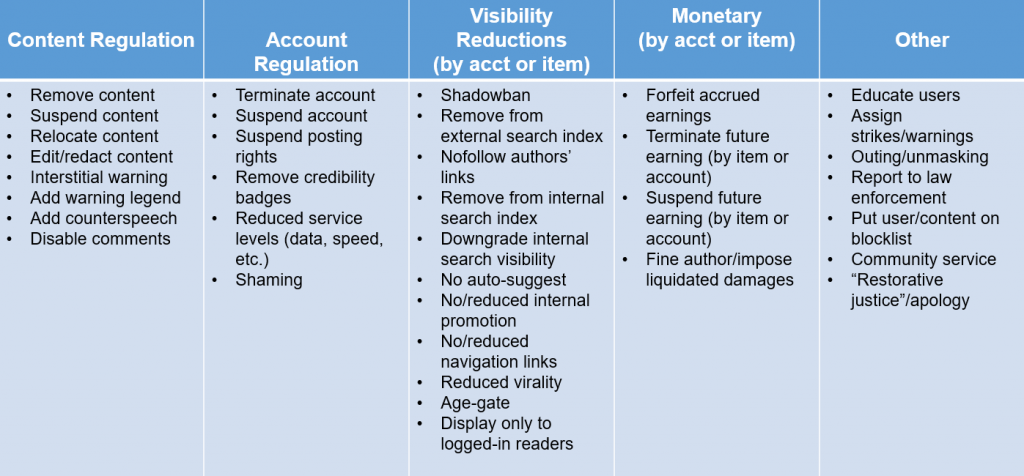

The paper addresses the range of content moderation options between “leave up” and “remove.” Many such moderation/“remedial” options between those two endpoints have actually been deployed in the field. This chart, one of the project’s key deliverables, shows how I taxonomized dozens of options:

(I loved digging into the mind-blowing remedial experiments by 1990s services, such as the now-mostly-forgotten “toading” remedy).

The paper then addresses the natural follow-up question: which of these multitudinous options are the “best”? The usual law professor answer: “it depends.” The paper walks through a normative framework showing why there isn’t a single “best” remedy and why it’s likely that each community will prefer different remedies that reflect their own audiences’ needs and editorial priorities.

I expect this paper will interest many industry participants. Many Internet services have explored and adopted various non-removal remedies, but I’m not sure how many services have approached this issue systematically. Services can benefit from carefully reviewing the paper and thinking about what options might be available to them, how those options might work better than their current approaches, and how their competitors may be innovating around them. I’m hoping this paper will spur a lot of conversations among trust & safety/content moderation professionals.

I also hope regulators will find this paper interesting, but I’m less optimistic they will. (The paper’s conclusion acknowledges the bleak realities). Ideally, this paper would help regulators realize why they should not hard-code removal as the sole remedy for online problems; and perhaps the paper could spur fresh thinking about how lesser remedies might better balance competing interests. Sadly, the current regulatory discourse is exclusively focused on burning down all user-generated content. If regulators continue on that path, this paper will be mooted entirely because there won’t be any user-generated content to moderate/remediate.

This paper tees up several attractive areas for follow-up research, which I’m sharing with you as possible paper topic ideas because I don’t plan to tackle them. Two I’ll highlight:

1) The paper only glibly addresses how remedies could be combined to reach better outcomes than any single remedy could achieve. I think this would be a great area for future investigation, especially empirical studies and A/B tests.

2) This paper addresses a slice of a broader topic about how private entities should establish remedial schemes for rule violations by their constituents. Examples of other entities facing similar issues: religious organizations; sports leagues; and membership organizations like fraternities/sororities. Perhaps I missed it, but I never found any precedent literature providing a normative framework for how organizations like these should think about remedial structures. It’s a big hairy topic, way beyond my expertise, but someone might be able to write a career-defining work filling that gap.

This paper is still in draft form, so I’d gratefully welcome your comments. I’d also welcome the opportunity to present on this topic to any interested audience.

A note about placement: I’ve published in the Michigan Technology Law Review before, and I’m happy to do it again. However, I thought this paper would interest general journals, and that didn’t happen this time. This placement cycle was unusual due to articles piled up from the 2020 shutdown plus the journals’ remote operations. I got ghosted by a majority of the law reviews I submitted to, even after multiple expedites. I decided that my priority was to close this project out because it’s already been incubating for 2+ years and I have other projects in the queue. Thus, rather than worrying about possible different homes in a future placement cycle, I am moving on.

The paper abstract:

This Article addresses a critical but underexplored topic of “platform” law: if a user’s online content or actions violate the rules, what should happen next? The longstanding expectation is that Internet services should remove violative content or accounts from their services, and many laws mandate that result. However, Internet services have a wide range of other options—what I call “remedies”—they can use to redress content or accounts that violate the applicable rules. The Article describes dozens of remedies that Internet services have actually imposed. The Article then provides a normative framework to help Internet services and regulators navigate these remedial options to address the many difficult tradeoffs involved in content moderation. By moving past the binary remove-or-not remedy framework that dominates the current discourse about content moderation, this Article helps to improve the efficacy of content moderation, promote free expression, promote competition among Internet services, and improve Internet services’ community-building functions.