Does the First Amendment Permit Government Actors to Manage Social Media Comments?–Tanner v. Ziegenhorn

This case involves Tanner’s comments on the Arkansas State Police’s Facebook page. The court’s ruling raises interesting, but troubling, questions about any government actor’s ability to enable reader comments on social media.

The Manually Deleted Comment

One State Police post to Facebook referenced a police officer with whom Tanner had interacted. Tanner commented “this guy sucks,” but the comment “contained no profanity, and it was on topic with the substance of the post.” The Facebook page administrator initially deleted the comment. After Tanner complained, the State Police allowed him to repost the comment 9 hours later, and the page administrator admitted that she had made a mistake in removing it. The jury found that Tanner’s comment wasn’t deleted because of his views (instead, it was deleted because he expressed those views using the word “sucks”). The court rejected Tanner’s First Amendment claim over this comment deletion because the “State Police’s deletion of one comment would not, the Court finds, chill a person of ordinary firmness. That person would post again or complain, as Tanner did. And the State Police corrected its action.”

The Block on Commenting

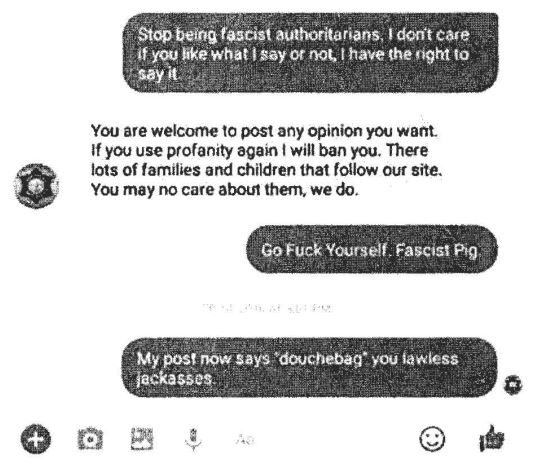

Tanner posted a second comment that implied that the police officer in question had filed a false report about him. “While not entirely on topic, this comment also abided by the page’s terms and conditions.” However, as discussed below, Facebook’s filter screened out some of Tanner’s other comments, and that spurred a private message exchange in which Tanner profanely criticized the State Police for deleting his comments.

In response to this exchange, the State Police blocked Tanner from commenting. His second comment was deleted or hidden due to the block. The jury found that the State Police didn’t discriminate against Tanner based on his views.

The court previously held that the Facebook page was a designated public forum, and “Profanity usually doesn’t justify governmental action against speech in a public forum.” The court says:

the agency acted against him for speaking. Tanner profanely criticized the State Police for the deletion of his comments. That was protected speech…. the agency’s decision to block Tanner was an adverse action that would chill a person of ordinary firmness from continuing in the activity. Indeed, he was barred from continuing with his critical private messages and public comments.

Thus, the court says the State Police can ignore Tanner’s private messages [but if the messages constitute petitioning, is that true?], but the State Police cannot “block Tanner from participating in its designated public forum based on his profane private messages.”

The Automatically Filtered Comments

Tanner had four comments screened by Facebook’s automated filters. The State Police set the configurable filter to “strong,” and then manually added the following supplemental words to the filter: “jackass”, “pig”, “pigs”, “n*gga”, “n*gger”, “ass”, “copper”, and “jerk.” Tanner’s screened comments used the words “douche,” “douchebag,” or “pig.” Presumably “douche” and “douchebag” triggered Facebook’s standard community moderation filter or its “strong” filter option for page owners. “Pig” got filtered solely due to the manual supplement.

Tanner had four comments screened by Facebook’s automated filters. The State Police set the configurable filter to “strong,” and then manually added the following supplemental words to the filter: “jackass”, “pig”, “pigs”, “n*gga”, “n*gger”, “ass”, “copper”, and “jerk.” Tanner’s screened comments used the words “douche,” “douchebag,” or “pig.” Presumably “douche” and “douchebag” triggered Facebook’s standard community moderation filter or its “strong” filter option for page owners. “Pig” got filtered solely due to the manual supplement.

Regarding this filtering, the court says:

Given the constant presence of children in the State Police’s Facebook audience, as well as the family-friendly aim of the page, the agency’s desire to filter out obscenities is both reasonable and compelling….

[However,] the Court has two concerns about the State Police’s filter choice. First, the choice is overbroad. The State Police doesn’t know what words it is actually blocking. This information is apparently unavailable. Insofar as the testimony disclosed, Facebook’s community standards might filter out some words even if the State Police turned the page’s profanity filter off. The Court understands that Facebook has its own baseline community standards and changes them regularly. The State Police can’t do anything about that. Facebook’s control of which words it alone will and will not tolerate, though, doesn’t free the State Police from complying with the First Amendment in the filtering decisions the agency can make. In these circumstances, if further study yields no additional information, then the State Police must consider turning the profanity filter off, or selecting a weak or medium setting, supplemented with a narrowly tailored list of obscenities that it wants to block. The Court leaves the specifics to the agency. The Court holds only that the State Police’s current filter choice is not narrow enough for this designated public forum.

Second, there is no plausible explanation for the words “pig”, “pigs”, “copper”, and “jerk” being on the State Police’s list of additional bad words other than impermissible viewpoint discrimination…. the additional words chosen by the State Police when it set up the page stopped some of his comments from being posted publicly due to their anti-police connotations. There was really no dispute about this fact. The slang terms “pig”, “pigs”, and “copper” can have an anti-police bent, but people are free to say those words. The First Amendment protects disrespectful language. And “jerk” has no place on any prohibited-words list, given the context of this page, the agency’s justification for having a filter, and the harmlessness of that word. Though some amount of filtering is fine in these circumstances, the State Police’s current list of specific words violates the First Amendment.

Implications

This is only a district court opinion. It’s not the final word on the matter. Still, it shows several structural problems with government actors trying to run social media accounts:

This is only a district court opinion. It’s not the final word on the matter. Still, it shows several structural problems with government actors trying to run social media accounts:

- the court says that the State Police may be violating the First Amendment by piggybacking on the Internet service’s standard content moderation techniques. This court doesn’t suggest that Facebook’s standard content moderation efforts are always constitutionally infirm, but isn’t that the logical dénouement of the court’s analysis? The actual details are opaque to all government actors and out of their control. Thus, the default content moderation policies, even if initially validated as compliant with the First Amendment, can change at any time in ways that unexpectedly reach constitutionally protected speech. Furthermore, if Facebook’s content moderation policies in fact encode viewpoint discrimination (and there’s no doubt that they do), then that viewpoint bias will carry over to the comments on the State Police’s page. That seems to unavoidably create First Amendment problems.

- the court implies that customized word filters are hugely problematic because they inevitably will reflect viewpoint bias. We saw a good example of this in PETA’s recent lawsuit against the National Institutes of Health and the Department of Health and Human Services for banning anti-vivisection words on their social media pages. The blocked word list clearly targeted PETA’s views (see graphic on the right), just like the State Police’s banned words did. I wish the court had walked us through why some words were OK and others weren’t. If I were a government actor, I would be extremely nervous about entering any words on a restricted list.

- the State Police will have difficulty maintaining civil discourse in their social media comments if they can’t ban profanity or juvenile name-calling like “sucks” or “jerk.” They certainly can’t keep the page “family-friendly.” This downward spiral is endemic in the Florida and Texas must-carry bills too: whatever the State Police can’t do here, those laws would probably bar private services from doing as well.

Given how hard it is to avoid the First Amendment problems without devolving into a cyber-cesspool, the only clearly constitutional solution for the State Police, and indeed for any government actor, is to avoid running a designated public forum online. In other words, the best option is likely to turn off user comments altogether.

There are other reasons to reach this conclusion. In Australia, for example, government actors are dropping social media interactivity out of fear that they might be held liable for any defamatory user posts.

Add the two developments together, and it’s clear that government actors crave unfiltered access to their constituents on social media, but they can’t effectively manage the legal consequences of allowing readers to talk back. So I expect that we will increasingly see government actors turn their social media accounts into one-way publication media, i.e., they will broadcast propaganda without any accountability from contrary views. This is one of the many reasons why I continue to disfavor having government actors on social media at all. If they can’t be fact-checked on the service, then they will lie with impunity, to everyone’s detriment. So while I’m glad to see Tanner’s First Amendment rights vindicated here, the government’s countermoves will inevitably lead to less government accountability.

Case citation: Tanner v. Ziegenhorn, 2021 WL 4502080 (E.D. Ark. Sept. 30, 2021). The jury verdict form.