Comments on Rep. Gosar’s “Stop the Censorship Act,” Another “Conservative” Attack on Section 230

At this point, many “conservatives” favor government regulation of the editorial practices of Internet companies. As a result, proposals coming from DC “conservatives” that reference “censorship” in their titles almost certainly are designed to embrace, not prevent, censorship. For example, I proposed renaming Sen. Hawley’s “Ending Support for Internet Censorship Act” as “Support for Internet Censorship Act,” because that’s exactly what the bill does. Similarly, with Rep. Gosar D.D.S.’s “Stop the Censorship Act,” I think a proper name would be simply “Censorship Act.” Truth in advertising.

Rep. Gosar’s HR 4027 was introduced in Congress July 25, 2019, but the text didn’t appear on Congress.gov until yesterday (August 14). I do not understand why it took 3 weeks for the bill’s text to become public. In the interim, the bill has caused some confusion. When introduced, Rep. Gosar issued a press release but didn’t include the text. Unsurprisingly, the press release wasn’t 100% accurate. That sparked several news reports that responded to the press release but were themselves imprecise because they weren’t grounded in the text.

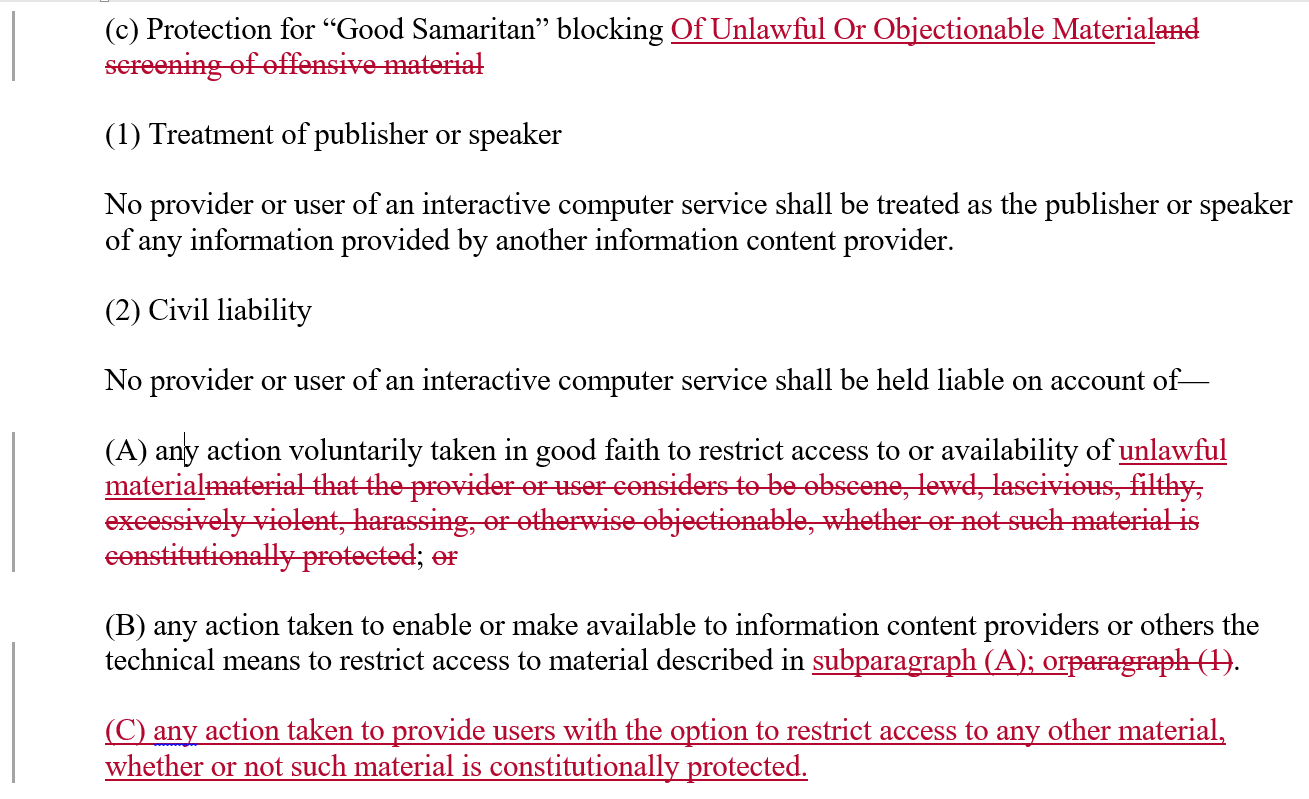

Now that the text is public, we can finally do a well-informed evaluation. The bill proposes three substantive changes to Section 230(c)(2). First, it would limit the 230(c)(2)(A) safe harbor for filtering/blocking decisions only to “unlawful” content, rather than the current much broader scope of “objectionable” content. Second, it would limit the 230(c)(2)(B) safe harbor for providing filtering instructions only to “unlawful” content. Third, it would create a new safe harbor for providing users with the ability to restrict access to material. I did a redline showing its proposed changes to Section 230(c):

This bill is terrible in many ways. Among other problems, it grossly misunderstands Section 230’s mechanics, its desired policy consequences would be horrible, and it is too poorly drafted to advance even those objectives. Some of the bill’s lowlights:

Internet Services Rarely Rely on Section 230(c)(2)(A) Any More. Section 230(c)(2)(A) doesn’t matter to most Internet services. As I’ve explained before, as a gross stereotype, Section 230(c)(1) protects “leave-up” decisions, and Section 230(c)(2)(A) protects “removal” decisions. In general, content removal decisions trigger far fewer lawsuits than leave-up decisions.

In the case of removals, the most likely plaintiff is the user whose content has been removed. Those users often don’t have enough at stake to sue, and they will have to navigate around the service’s TOS and its many provisions protecting the service.

Meanwhile, courts have narrowed the scope of Section 230(c)(2)(A)’s safe harbor to the point where very few services rely on it. Instead, courts routinely hold that Section 230(c)(1), not Section 230(c)(2)(A), preempts users’ suits over removal decisions, so most Internet services rely on that.

As a result, amending Section 230(c)(2)(A) won’t likely change the outcomes for users who experience removals. In other words, the bill’s revisions to Section 230(c)(2)(A) aren’t likely to advance the drafters’ policy objectives. It’s perplexing, and possibly embarrassing, that the bill drafters apparently misunderstood the role Section 230(c)(2)(A) actually plays in the ecosystem. Oops.

Anti-Threat Vendors Rely on Section 230(c)(2)(B), But This Bill Would Eviscerate Their Safe Harbor. While Section 230(c)(2)(A) has faded in importance, Section 230(c)(2)(B) remains a vital protection for anti-threat vendors, such as vendors of anti-spam, anti-spyware, anti-malware, and anti-virus software. Every time these vendors make a classification decision that will block their users’ access to third party software or content, they face potential lawsuits. Fortunately, in the Zango v. Kaspersky ruling from a decade ago, the Ninth Circuit held that Section 230(c)(2)(B) applied to those lawsuits. In practice, that ruling ended lawsuits by spammers, adware vendors, and other producers of threats against software vendors over blocking and classification decisions.

The bill would implicitly overturn Zango v. Kaspersky and instead provide a safe harbor only for blocking “unlawful content.” As a result, vendors who produce software or content that is pernicious/unwanted, but not technically illegal, would have much greater legal leverage to challenge any classification decisions. This has at least two bad consequences: (1) anti-threat software will become substantially less useful to users because it will let through more unwanted stuff, and (2) there will be a lot more litigation over classification decisions, which will raise defense costs and make the anti-threat vendors scared to block anyone. So instead of calling this bill “Stop the Censorship Act,” it might be more accurate to call it the “Stop Protecting Consumers from Spam, Spyware, Malware, and Viruses Act.”

The bill would also remove the safe harbor for parental control software vendors that block children’s access to content that is lawful but nevertheless inappropriate for kids. If this changes the vendors’ behavior, the bill seemingly would make parental control software useless. That outcome would be rich in historical irony, because Congress wanted Section 230 to spur the adoption of self-regulatory efforts to keep kids safe online–through technologies like parental control software. Indeed, Section 230(d) (added a couple years after Section 230’s initial passage) *requires* “interactive computer services” to notify customers about the availability of parental control software (a provision that is widely ignored, but whatever). This bill would directly undermine one of Section 230’s principal policy goals, so perhaps it should be renamed the “Stop Keeping Kids Safe Online Act.”

What Is “Unlawful” Content? The bill doesn’t define the term “unlawful content,” and its meaning isn’t self-evident. Some possibilities of what it might mean:

- Most likely the drafters meant that “unlawful” content only refers to content categorically outside of U.S. Constitutional protection. That includes things like child porn, obscenity, true threats/incitement to imminent violence, certain types of speech incident to criminal conduct, and possibly copyright infringement. Note that some other standard First Amendment exclusions, such as defamation, enjoy substantial procedural limits required by the First Amendment, so it’s not clear how to categorize that kind of content.

- Any content that creates potential tort exposure. This would include a wide but indeterminate array of content.

- Any content that is illegal or tortious under any law anywhere on the globe. In practice, that would be virtually all content.

Due to the term’s imprecision, it’s hard to judge the scope of the suppressable content that would be consistent with the redefined safe harbor.

Limiting Content Filtering to “Unlawful Content” Would Be Stupid. Let’s assume the bill would limit the Section 230(c)(2) safe harbors only to removing Constitutionally unprotected content. If so, removing pernicious but legal content would no longer get the safe harbor. If Internet services actually changed their behavior due to this reshaped safe harbor, they would be overrun by trollers, spammers, and miscreants, which would crowd out all productive conversations. In other words, this bill seeks to make Internet services look more like 8Chan. Perhaps this bill should be renamed the “I ❤ 8Chan Act.”

What Does It Mean to Give Users “Options to Restrict Access” to Content? The bill proposes a new safe harbor for putting more filtering power in users’ hands. Putting aside the filter bubble risk, on balance that sounds like it could be a good thing.

But what does it mean to give users “options to restrict access”? The press release expressly cites three examples: Google SafeSearch, Twitter Quality Filter or YouTube Restricted Mode (I retained the quirky italicization from the press release).

Wut? Google SafeSearch is on by default, so does this mean that Google’s default search always will qualify for the new 230(c)(2)(C) safe harbor even though SafeSearch routinely blocks and downgrades lawful content? I don’t know much about Twitter’s Quality Filter or how widely it’s used. Surely the reference to YouTube’s Restricted Mode can’t be right–after all, having its videos restricted triggered Prager University to sue Google (unsuccessfully). Or maybe the drafters didn’t realize that?

All three examples involve coarse filters–essentially, they are either on-or-off. So if a binary on/off filtering tool qualifies as giving users “options” to filter, then what personalization or filtering tool wouldn’t qualify for 230(c)(2)(C)?

Because many services give users options to personalize the content they see in some way or another, 230(c)(2)(C) seemingly could swallow up the rest of the bill and make the changes to 230(c)(2)(A) and (B) functionally irrelevant. For example, because installing anti-threat software or parental control software is a binary “option,” maybe those services gain back via 230(c)(2)(C) everything that they seemingly lost in the proposed revisions to 230(c)(2)(B)…? But it’s hard to say that most filtering software puts true “options” into the hands of users–in virtually all cases, anti-spam software is a binary on/off, so the real filtering power remains in the service’s classification decisions. So if 230(c)(2)(C) is broad enough to pick up binary options, I don’t understand what the drafters think they are accomplishing.

[Note the odd grammar of 230(c)(2)(C). The safe harbor only applies to “actions taken to provide” users with filters. It doesn’t say that the safe harbor applies to the actual filtering decisions. I’m assuming it does because that’s the only sensible outcome. If the safe harbor doesn’t protect filtering decisions, I have no idea what would be covered by this safe harbor.]

* * *

Last month, a huge coalition of Section 230 experts released seven principles to evaluate proposed amendments to Section 230. I’ll use the principles to evaluate Rep. Gosar’s bill:

- Principle #1: Content creators bear primary responsibility for their speech and actions. The bill doesn’t directly address this issue because it focuses on removals, not leave-up decisions.

- Principle #2: Any new intermediary liability law must not target constitutionally protected speech. Arguably, the bill doesn’t violate this principle because it seeks to protect Constitutionally protected speech from editorial filtering. But it advances that goal at the expense of the Internet services’ editorial discretion, so it also seeks to restrict the constitutionally protected speech of Internet services.

- Principle #3: The law shouldn’t discourage Internet services from moderating content. It absolutely tries to discourage Internet services from moderating content in the worst possible way.

- Principle #4: Section 230 does not, and should not, require “neutrality.” The bill doesn’t expressly address neutrality, but by forcing Internet companies to treat pro-social and anti-social content equally, the bill creates the kind of false equivalency that violates this principle.

- Principle #5: We need a uniform national legal standard. The bill doesn’t directly address this issue. However, by circumscribing Section 230(c)(2)’s shield, it would newly expose defendants to a wide range of diverse state law claims–something we saw frequently when spammers and adware vendors sued anti-threat software vendors. So the bill violates this principle sub silentio.

- Principle #6: We must continue to promote innovation on the Internet. The bill drafters might think they are promoting innovation with the new 230(c)(2)(C) safe harbor, which tries to push more filtering to user-controlled options and thus might spur filtering innovations. I don’t think that would be the result, especially if a binary on-off option satisfies the safe harbor. However, I don’t think this bill has the same pernicious anti-innovation effects that Sen. Hawley’s bill would have.

- Principle #7: Section 230 should apply equally across a broad spectrum of online services. The bill doesn’t directly implicate this issue. However, by mucking with Section 230(c)(2)(B), the bill would actually newly expose anti-threat vendors to liability that might not be faced by other players in the ecosystem.

On the plus side, this bill doesn’t run afoul of all the principles. On the minus side, this bill directly, squarely, and perniciously violates several principles, especially Principles #3 and 4. That should be a huge red flag.

* * *

It doesn’t bring me any joy to dunk on a bill like this. Like Sen. Hawley’s bill, it almost certainly was meant as a piece of performative art to “play to the base” rather than as a serious policy proposal. But even as performative art, it highlights how Section 230 is grossly misunderstood by politicians inside DC, and it’s a reminder that modifying Section 230 requires extreme care because even minor changes could have dramatic and very-much-unwanted consequences.

Even if there are merits to taking a closer look at Section 230, proposals like this don’t aid that process. Instead, by perpetuating myths about Section 230 and politicizing Section 230, bills like this degrade the conversation. They move us yet closer to the day when Congress does something terrible to Section 230 for all of the wrong reasons.

[SPECIAL SHOUTOUT TO REPORTERS: If I’m right that Sen. Hawley’s bill and this bill are performative art, not serious policy proposals, you do a HUGE disservice to your readers and the world when you treat the bill authors as credible information sources on Section 230. In effect, you are helping them amplify their messages to their base while polluting the discourse for everyone else. Of course, the bill drafters know you will do this, so introducing a performative art bill buys them an ill-deserved seat at the discussion. They are intentionally weaponizing your compulsion to present “both sides” against you. Don’t take the bait.]

* * *

Appendix

I offered several alternative names for the bill, so I thought it would be helpful to recap them all here:

“Censorship Act”

“Stop Protecting Consumers from Spam, Spyware, Malware, and Viruses Act”

“Stop Keeping Kids Safe Online Act”

“I ❤ 8Chan Act”