Addiction Lawsuit Against TikTok Can Proceed in Nevada–TikTok v. Nevada District Court

All eyes are on the social media addiction class action lawsuits in California federal and state courts, where the plaintiffs are getting close to conducting trials that pose existential implications for many of the major social media services.


Meanwhile, in Nevada, the Nevada Supreme Court allows the state’s addiction lawsuit against TikTok to proceed as a violation of the Nevada Deceptive Trade Practices Act (NDTPA). It’s a rough ruling for TikTok, but it’s consistent with the social media addiction jurisprudence where the legal precedents as we know them don’t seem to apply.

The court summarizes the background: “The State alleged that TikTok knowingly designed its social media and shortform online video platform to addict young users, thus inflicting various harms on young users in Nevada, and knowingly made misrepresentations and material omissions about the platform’s safety.” The state particularly called out TikTok’s “(1) low-friction variable rewards (endless scroll and autoplay), (2) social manipulation tools (quantified popularity and coins), (3) ephemeral content, (4) push notifications, (5) visual filters, and (6) ineffective and misleading parental controls and well-being initiatives.”

Jurisdiction

The court upheld personal jurisdiction in Nevada, citing Briskin. I tried to teach Briskin in my Internet Law course this semester and it was absolutely unteachable–except perhaps to illustrate the broad realpolitik answer that online publishers’ jurisdictional challenges are destined to fail.

For example, the Nevada Supreme Court credits the state’s claim that “TikTok’s interactive social media business model depends on capturing users’ attention in order to collect demographic and behavioral data that it then sells to third-party advertisers.” Please help me identify any online publishers who do not depend on capturing users’ attention….

Section 230

We are persuaded by the reasoning in Lemmon and conclude that the district court properly applied that decision in rejecting TikTok’s CDA § 230 immunity argument. On its face, the State’s complaint does not seek to hold TikTok liable for any third-party content that it publishes. The State’s first NDTPA claim under NRS 598.091 targets TikTok’s alleged own knowingly false statements and omissions to regulators and the public about young users’ safety on the platform. Though the State references problematic third-party content in its complaint—such as TikTok “challenge” trends and videos depicting drugs, sex, and suicide—it does so to support its claims that (1) TikTok made misrepresentations about its enforcement of the platform’s community guidelines and the safety measures that TikTok implements and (2) TikTok knows that young users experience mental, physical, and privacy harms due to their compulsive TikTok use. Therefore, consistent with the Barnes analysis, this claim does not trigger CDA § 230 immunity because the State seeks to hold TikTok liable for its own statements and omissions and resulting duties to users with only a tangential relationship to third-party content.

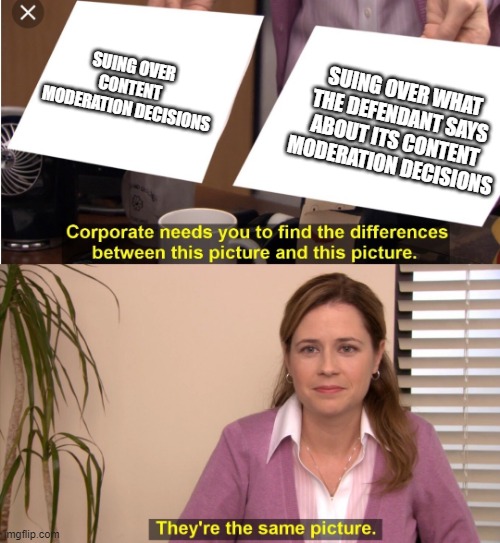

The court accepts the venerable plaintiff move of saying that the lawsuit targets the defendant not for its content moderation decisions, but for how the defendant describes its content moderation decisions. I’ve covered that gambit so many times that I made a meme:

The court continues:

As to the State’s second NDTPA claim under NRS 598.0923, it explicitly targets the design of TikTok’s platform rather than the content of posted videos, alleging that TikTok “willfully committed unconscionable trade practices in designing and deploying” features intended to exploit young users’ lack of knowledge or capacity to appreciate the risks inherent in the platform’s design. That the complaint’s allegations specifically target the platform’s content-neutral design features distinguishes this case from Moody v. NetChoice, LLC, 603 U.S. 707 (2024), a case that TikTok argues supports immunity….unlike Moody, this case does not concern restrictive state laws and the State, as the plaintiff, explicitly does not seek to curtail or alter the mix of third-party content that TikTok publishes—it only purports to challenge the design features that TikTok implements to keep users on the platform as long as possible, no matter the type of third-party content that may appear in a user’s feed.

That some of these features interact with third-party content does not alter that the State seeks to hold TikTok liable for “violating its distinct duty to design a reasonably safe product,” rather than for publishing user content. Thus, the State’s claims arise from TikTok’s alleged duty to provide a reasonably safe social media app rather than any failure to edit or remove third-party content.

The phrase “content-neutral design features” is an obvious oxymoron. Decisions about gathering, organizing, or disseminating content are never “content-neutral.” Those decisions always and necessarily are editorial decisions that result in prioritizing some content at the expense of others. That makes editorial “design features” a synonym for editorial decision-making, which in turn is supposed to be fully protected by both the First Amendment and Section 230.

Also, a synonym for “design features that TikTok implements to keep users on the platform as long as possible” is “product marketing.” Every business–including every publisher–does it.

First Amendment

[C]urating third-party content and moderating that content can amount to protected expressive activity under the First Amendment, per Moody. However, Moody expressly declined to consider how the First Amendment would apply to algorithms and other design features that employ user-interaction data to shape an addictive user experience. Moreover, the State explicitly disclaims any intent to impose liability based on any content or its curation and thus alleges a claim that does not target expressive First Amendment activity.

It is disingenuous for the state to simultaneously disclaim that it is trying to impose liability on TikTok for “content curation” and yet continue to target attributes of TikTok’s publication “design.” They are the same thing.

The court continues:

We also agree with the district court that the First Amendment does not bar the State’s misrepresentation and omission claims, as the First Amendment does not protect inherently misleading commercial speech. Cent. Hudson Gas & Elec. Corp. v. Pub. Serv. Comm’n of N.Y., 447 U.S. 557, 566 (1980). The misleading aspect is key to the State’s claim that TikTok misrepresented or omitted information on its website, in its community guidelines, and in presentations to PTAs and the U.S. Congress. Though TikTok argues that its representations regarding the safety of young users on the platform are protected under the First Amendment as subjective statements of opinion or aspiration, the State points to several affirmative statements that go beyond mere aspiration or opinion, such as statements in the community standards that “[TikTok] do[es] not allow content that may put young people at risk of exploitation, or psychological, physical or developmental harm,” including “child sexual abuse material (CSAM), youth abuse, bullying, dangerous activities and challenges, exposure to overtly mature themes, and consumption of alcohol, tobacco, drugs, or regulated substances.” Thus, the State has asserted claims that are not subject to dismissal based on the First Amendment.

The court cuts major corners here. It is true that the First Amendment doesn’t prevent the regulation of untruthful commercial speech when that is the finally adjudicated result, but at this stage of the lawsuit, that sidesteps the key questions:

- is the state suing over commercial speech? TOS-type disclosures aren’t necessarily commercial speech. See Prager U. v. YouTube (“Defendants’ policies and guidelines are more akin to instruction manuals for physical products.”).

- does the purported commercial speech constitute factual claims instead of puffery? The statements that the court suggests are fact claims sure sound like puffery to me, but the court couldn’t be bothered to justify its classification.

- are the claims actually untruthful? The state should be required to make a showing about the falsity in the complaint, instead of getting a free pass to discovery based on the unevaluated assertions that the defendant’s statements are false. But the court seems to accept that the TikTok statements identified by the state are false without any evaluation at all.

Case Citation: TikTok, Inc. v. District Court (ex rel State of Nevada), 141 Nev. Adv. Op. 51 (Nev. Supreme Ct. Nov. 6, 2025)

SUPPLEMENT: A reminder that Congress banned TikTok in the US starting January 19, 2025, and the Supreme Court held the First Amendment had to yield to the ban due to Congress’ determinations that TikTok constitutes a national security threat and poses grave privacy risks to its users. We’re coming up on the 10-month anniversary of the ban date and: TikTok is not banned in the US; TikTok is still allegedly committing all of those violations that justified the circumscription of the First Amendment; and the prevailing attitude appears to be 🤷‍♂️.
