Big Ruling for Free Speech: Most of Florida’s Social Media Censorship Law (SB 7072) Remains Enjoined–NetChoice v. Attorney General

On Monday, a unanimous three-judge panel of the 11th Circuit issued an important Internet free speech opinion, NetChoice v. Attorney General (a/k/a NetChoice v. Moody). The opinion holds that the key parts of Florida’s social media censorship law (SB 7072) likely violate the First Amendment and should remain enjoined. As the panel summarizes: “social-media companies—even the biggest ones—are ‘private actors’ whose rights the First Amendment protects [cite to Halleck]…their so-called ‘content-moderation’ decisions constitute protected exercises of editorial judgment, and…the provisions of the new Florida law that restrict large platforms’ ability to engage in content moderation unconstitutionally burden that prerogative.”

The opinion also highlights the madness of the Fifth Circuit allowing the Texas social media censorship law to take effect via a 1-line order. This opinion gives the Supreme Court another reason to intervene in the Texas case on the shadow docket. If the Supreme Court instead defers the matter, the opinion sets up a likely circuit split that could support granting certiorari.

Longer term, this opinion implies significant limits on the ability of legislatures to regulate Internet services’ editorial operations. Unless/until it gets overridden by an en banc ruling or the Supreme Court, it sends a powerful cautionary message to the thousands of legislators drafting anti-Big Tech bills, urging them to question the legitimacy of their regulatory efforts.

Though this is a major win for free speech online, the panel unfortunately adopted an overly lax legal standard for evaluating mandatory editorial transparency laws, so it lifted the injunction for some provisions. Some of these provisions may not survive on remand, however.

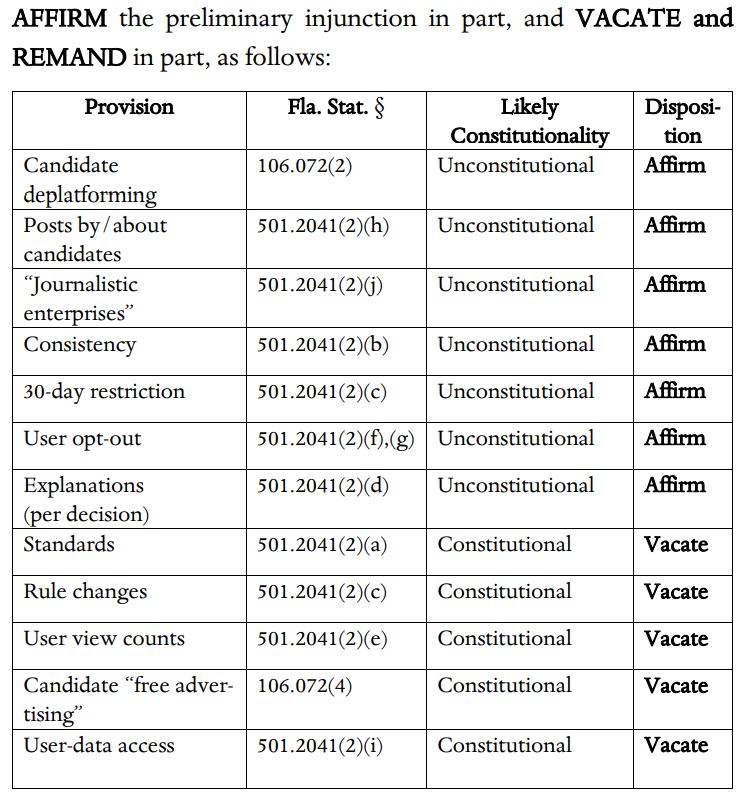

The court helpfully summarized its outcomes:

Do Social Media Make Editorial Judgments Protected by the First Amendment?

The opinion tackles one of the hottest topics in Internet Law: do user-generated content (UGC) services engage in editorial functions protected by the First Amendment? The panel answers that question with a decisive yes.

The court explains (bolding added):

> social-media platforms aren’t ‘dumb pipes’: They’re not just servers and hard drives storing information or hosting blogs that anyone can access, and they’re not internet service providers reflexively transmitting data from point A to point B. Rather, when a user visits Facebook or Twitter, for instance, she sees a curated and edited compilation of content from the people and organizations that she follows. If she follows 1,000 people and 100 organizations on a particular platform, for instance, her “feed”—for better or worse—won’t just consist of every single post created by every single one of those people and organizations arranged in reverse-chronological order. Rather, the platform will have exercised editorial judgment in two key ways: First, the platform will have removed posts that violate its terms of service or community standards—for instance, those containing hate speech, pornography, or violent content. Second, it will have arranged available content by choosing how to prioritize and display posts—effectively selecting which users’ speech the viewer will see, and in what order, during any given visit to the site…
>
> the platforms invest significant time and resources into editing and organizing—the best word, we think, is curating—users’ posts into collections of content that they then disseminate to others. By engaging in this content moderation, the platforms develop particular market niches, foster different sorts of online communities, and promote various values and viewpoints.
>
> when a platform removes or deprioritizes a user or post, it makes a judgment about whether and to what extent it will publish information to its users—a judgment rooted in the platform’s own views about the sorts of content and viewpoints that are valuable and appropriate for dissemination on its site…When a platform selectively removes what it perceives to be incendiary political rhetoric, pornographic content, or public-health misinformation, it conveys a message and thereby engages in “speech” within the meaning of the First Amendment.

This isn’t complicated. Of course curatorial efforts, like content moderation, are expressive; and those expressive decisions reflect the curator’s efforts to serve its unique audience. Thus, the panel boils its conclusion down simply: “Laws that restrict platforms’ ability to speak through content moderation therefore trigger First Amendment scrutiny….[if] the decision about whether, to what extent, and in what manner to disseminate third-party content is itself speech or inherently expressive conduct, which we have said it is, then the Act does interfere with platforms’ ability to speak.”

In support of this conclusion, the panel refers to Miami Herald v. Tornillo (what it correctly calls the “pathmarking” case), PG&E, Turner, and Hurley:

> Social-media platforms’ content-moderation decisions are, we think, closely analogous to the editorial judgments that the Supreme Court recognized in Miami Herald, Pacific Gas, Turner, and Hurley. Like parade organizers and cable operators, social-media companies are in the business of delivering curated compilations of speech created, in the first instance, by others…When platforms choose to remove users or posts, deprioritize content in viewers’ feeds or search results, or sanction breaches of their community standards, they engage in First-Amendment-protected activity.

The panel distinguishes the PruneYard and FAIR precedents:

- “PruneYard is readily distinguishable….Because NetChoice asserts that S.B. 7072 interferes with the platforms’ own speech rights by forcing them to carry messages that contradict their community standards and terms of service, PruneYard is inapposite.” “Readily distinguishable” is judicial-speak for “not even close.”

- “FAIR may be a bit closer, but it, too, is distinguishable….FAIR isn’t controlling here because social-media platforms warrant First Amendment protection on both of the grounds that the Court held that law-school recruiting services didn’t.” (The court also rejects the “unified speech product” argument).

- “S.B. 7072 interferes with social-media platforms’ own ‘speech’ within the meaning of the First Amendment. Social-media platforms, unlike law-school recruiting services, are in the business of disseminating curated collections of speech….S.B. 7072’s content-moderation restrictions reduce the number of posts over which platforms can exercise their editorial judgment….a law that requires the platform to disseminate speech with which it disagrees interferes with its own message and thereby implicates its First Amendment rights.” In other words, because content moderation is an editorial function, it’s different than the recruiter gatekeeping function performed by higher ed. The extension of FAIR to the publication context has always seemed like a stretch.

- “social-media platforms are engaged in inherently expressive conduct of the sort that the Court found lacking in FAIR…Unlike the law schools in FAIR, social-media platforms’ content-moderation decisions communicate messages when they remove or “shadow-ban” users or content….a reasonable observer witnessing a platform remove a user or item of content would infer, at a minimum, a message of disapproval. Thus, social-media platforms engage in content moderation that is inherently expressive notwithstanding FAIR.”

The panel repeatedly makes this latter point about the services communicating their own messages through content moderation. For example:

> A reasonable person would likely infer “some sort of message” from, say, Facebook removing hate speech or Twitter banning a politician. Indeed, unless posts and users are removed randomly, those sorts of actions necessarily convey some sort of message—most obviously, the platforms’ disagreement with or disapproval of certain content, viewpoints, or users. Here, for instance, the driving force behind S.B. 7072 seems to have been a perception (right or wrong) that some platforms’ content-moderation decisions reflected a “leftist” bias against “conservative” views—which, for better or worse, surely counts as expressing a message. That observers perceive bias in platforms’ content moderation decisions is compelling evidence that those decisions are indeed expressive…
>
> we find it unlikely that a reasonable observer would think, for instance, that the reason he rarely or never sees pornography on Facebook is that none of Facebook’s billions of users ever posts any. The more reasonable inference to be drawn from the fact that certain types of content rarely or never appear when a user browses a social-media site—or why certain posts disappear or prolific Twitter users vanish from the platform after making controversial statements—is that the platform disapproves.

In a footnote, the panel adds: “To the extent that the states argue that social-media platforms lack the requisite ‘intent’ to convey a message, we find it implausible that platforms would engage in the laborious process of defining detailed community standards, identifying offending content, and removing or deprioritizing that content if they didn’t intend to convey ‘some sort of message.’ Unsurprisingly, the record in this case confirms platforms’ intent to communicate messages through their content-moderation decisions—including that certain material is harmful or unwelcome on their sites.”

Elsewhere, the panel has this gem: “private actors have a First Amendment right to be ‘unfair’—which is to say, a right to have and express their own points of view.” The court’s response to those who think a service’s content moderation decisions treat them unfairly? TOUGH. Take your content somewhere else.

The panel keeps hammering the content-moderation-is-speech point because regulatory efforts like Texas’ and Florida’s disregard the expressive value of curation, even as they seek to usurp the curation process to advance the regulators’ interests.

For this reason, it doesn’t matter whether readers impute responsibility for users’ messages to the services. “Consumer confusion simply isn’t a prerequisite to First Amendment protection.”

The panel also rejects the common argument that editorial discretion only counts if it’s exercised ex ante:

> The “conduct” that the challenged provisions regulate—what this entire appeal is about—is the platforms’ “censorship” of users’ posts—i.e., the posts that platforms do review and remove or deprioritize. The question, then, is whether that conduct is expressive. For reasons we’ve explained, we think it unquestionably is.

UGC Services Aren’t Common Carriers

The panel emphatically rejects the oft-advanced argument that Internet services are common carriers (emphasis added):

> social-media platforms are not—in the nature of things, so to speak—common carriers…While it’s true that social-media platforms generally hold themselves open to all members of the public, they require users, as preconditions of access, to accept their terms of service and abide by their community standards….
>
> because social-media platforms exercise—and have historically exercised—inherently expressive editorial judgment, they aren’t common carriers, and a state law can’t force them to act as such unless it survives First Amendment scrutiny…
>
> Neither law nor logic recognizes government authority to strip an entity of its First Amendment rights merely by labeling it a common carrier. Quite the contrary, if social-media platforms currently possess the First Amendment right to exercise editorial judgment, as we hold it is substantially likely they do, then any law infringing that right—even one bearing the terminology of “common carri[age]”—should be assessed under the same standards that apply to other laws burdening First-Amendment-protected activity.

In other words, the panel recognizes that “common carriage” is just another name for “government censorship.” Thus, the label does not give government regulation a free pass around the First Amendment.

In a footnote, the panel adds that the common carrier label is incoherent: “Because S.B. 7072 prevents platforms from removing content regardless of its impact on others, it appears to extend beyond the historical obligations of common carriers.”

The panel emphasizes that its conclusion on common carriage doesn’t vary with the publisher’s size. Even monopolist publishers are entitled to First Amendment protection:

> The State’s best rejoinder is that because large social-media platforms are clothed with a “public trust” and have “substantial market power,” they are (or should be treated like) common carriers. These premises aren’t uncontroversial, but even if they’re true, they wouldn’t change our conclusion….the Supreme Court has squarely rejected the suggestion that a private company engaging in speech within the meaning of the First Amendment loses its constitutional rights just because it succeeds in the marketplace and hits it big [cite to Tornillo]

Application to SB 7072

With respect to SB 7072:

> S.B. 7072’s content-moderation restrictions all limit platforms’ ability to exercise editorial judgment and thus trigger First Amendment scrutiny. The provisions that prohibit deplatforming candidates (§ 106.072(2)), deprioritizing and “shadow-banning” content by or about candidates (§ 501.2041(2)(h)), and censoring, deplatforming, or shadow-banning “journalistic enterprises” (§ 501.2041(2)(j)) all clearly restrict platforms’ editorial judgment by preventing them from removing or deprioritizing content or users and forcing them to disseminate messages that they find objectionable.
>
> The consistency requirement (§ 501.2041(2)(b)) and the 30-day restriction (§ 501.2041(2)(c)) also—if somewhat less obviously—restrict editorial judgment. Together, these provisions force platforms to remove (or retain) all content that is similar to material that they have previously removed (or retained). Even if a platform wants to retain or remove content in an inconsistent manner—for instance, to steer discourse in a particular direction—it may not do so. And even if a platform wants to leave certain content up and continue distributing it to users, it can’t do so if within the past 30 days it’s removed other content that a court might find to be similar. These provisions thus burden platforms’ right to make editorial judgments on a case-by-case basis or to change the types of content they’ll disseminate—and, hence, the messages they express.
>
> The user-opt-out requirement (§ 501.2041(2)(f), (g)) also triggers First Amendment scrutiny because it forces platforms, upon a user’s request, not to exercise the editorial discretion that they otherwise would in curating content—prioritizing some posts and deprioritizing others—in the user’s feed. Even if a platform would prefer, for its own reasons, to give greater prominence to some posts while limiting the reach of others, the opt-out provision would prohibit it from doing so, at least with respect to some users.

In contrast, the panel says that the disclosure provisions should be treated differently:

> S.B. 7072’s disclosure provisions implicate the First Amendment, but for a different reason. These provisions don’t directly restrict editorial judgment or expressive conduct, but indirectly burden platforms’ editorial judgment by compelling them to disclose certain information. Laws that compel commercial disclosures and thereby indirectly burden protected speech trigger relatively permissive First Amendment scrutiny.

In my paper, I explain why the panel is wrong to disregard the disclosures’ effects on editorial judgment/expressive conduct. Here, because the disclosures relate to editorial decisions, the disclosure obligations distort editorial judgment: they encourage publishers to make editorial decisions that will please regulators (to avoid drawing investigatory attention) and that reduce the risk of weaponizable anecdotes surfacing in discovery. This is not true of other commercial disclosures, like food labels. As I’ve said many times, speech venues like social media aren’t the same kind of product as cans of corn.

The panel says that requiring Internet services to provide terminated users with access to their data for 60 days after termination has no First Amendment implications. This requirement is similar to many privacy-law requirements that give users the ability to access, delete, or port their data, so I’m guessing many privacy lawyers were relieved to see this. Nevertheless, the panel says that the plaintiffs can still make a showing on remand that this requirement is unduly burdensome. That showing may be possible. The parties most likely to take advantage of this access right are bad-faith actors like spammers and trolls, and it would be socially wasteful to treat them like legitimate users.

What Level of Scrutiny?

Up to this point, I think the panel’s ruling has been pretty straightforward and obvious, even though it rejects the voluminous pro-censorship FUD directed towards these topics. In contrast, the panel’s opinion gets a lot spicier applying the First Amendment to the law. 🌶

The panel rejects the plaintiffs’ arguments that the law is impermissibly viewpoint-based or speaker-based (in a footnote, the panel says “speaker-based distinctions trigger strict scrutiny—or perhaps face per se invalidation—when they indicate underlying content- or viewpoint-based discrimination”). Instead, the panel does a “more nuanced” provision-by-provision analysis of the scrutiny level.

[Note: though the plaintiffs’ lawyers did a great job, their complaint and initial filings did not contain a provision-by-provision analysis. This approach worked just fine at the district court level, but it left gaps in the plaintiffs’ argumentation that the appellate panel’s nuanced analysis exposed. Word count limits on briefs surely had something to do with this; the Florida law contained so much awfulness that it was impossible to address everything comprehensively. Still, knowing this case is destined for the Supreme Court, I wonder if it would have been possible to build a sturdier foundation in the complaint and initial briefs.]

The panel says that the content moderation restrictions trigger heightened scrutiny. Some parts, such as the favoritism for journalistic enterprises, are content-based and trigger strict scrutiny. Others, such as the candidate-deplatforming restrictions or the algorithm opt-out, are content-neutral because they don’t depend “on the substance of platforms’ content-moderation decisions.” I didn’t understand that latter conclusion. Giving preference to some speakers over others (based on their identity as politicians), and changing how publishers present content, both sound content-based to me. Even so, the panel says these content-neutral provisions trigger intermediate scrutiny because they regulate expressive conduct.

But does this parsing of the scrutiny levels matter? No. The court says that none of the content moderation restrictions would survive either strict or intermediate scrutiny.

With respect to some of the disclosure obligations, which the panel classifies as content-neutral, the panel adopts the Zauderer standard. The panel expressly acknowledges that Zauderer “is typically applied in the context of advertising and to the government’s interest in preventing consumer deception,” which sound like pretty good reasons not to adopt this standard. Nevertheless, the panel says the disclosures would “provide users with helpful information that prevents them from being misled about platforms’ policies.” Hold up: stretching Zauderer this far is both unnecessary and pernicious. If consumers are being misled, standard consumer protection laws are already being violated, so this law isn’t needed to fix that marketplace deception. Furthermore, the panel is surprisingly credulous about the state’s justification that these requirements would provide “helpful information.” Where’s the evidence of that? Everyone knows that TOS disclosures are worthless, and this law won’t fix that. Worse, the panel’s standard opens the door for legislatures to impose mandatory disclosure obligations about the editorial practices of other publishers, including newspapers and book publishers, on the same (dubious) premise of protecting consumers from being misled. A broad-based disclosure obligation imposed on all publishers’ editorial decisions sounds like a censor’s dream. Finally, the panel didn’t mention the 4th Circuit’s Washington Post v. McManus ruling, which rejected some mandatory disclosures about political advertising. Does this ruling create a circuit split with the 4th Circuit?

Applying the Scrutiny Level

Having concluded that the content moderation restrictions get heightened scrutiny, the panel mercilessly denigrates the state’s proffered justifications for the law 🌶🌶🌶:

- “We think it substantially likely that S.B. 7072’s content-moderation restrictions do not further any substantial governmental interest—much less any compelling one.”

- “there’s no legitimate—let alone substantial—governmental interest in leveling the expressive playing field.” By saying there’s no legitimate interest, the court signals that laws designed to “level the expressive playing field” cannot survive even rational basis review.

- “Nor is there a substantial governmental interest in enabling users—who, remember, have no vested right to a social-media account—to say whatever they want on privately owned platforms that would prefer to remove their posts.” This describes all must-carry obligations. According to this panel, must-carry obligations cannot survive intermediate scrutiny.

- “The State might also assert an interest in ‘promoting the widespread dissemination of information from a multiplicity of sources.’… it’s hard to imagine how the State could have a ‘substantial’ interest in forcing large platforms—and only large platforms—to carry these parties’ speech…Even if other channels aren’t as effective as, say, Facebook, the State has no substantial (or even legitimate) interest in restricting platforms’ speech—the messages that platforms express when they remove content they find objectionable—to ‘enhance the relative voice’ of certain candidates and journalistic enterprises” (bold added). Speakers don’t get a guaranteed privilege to reach Facebook’s audience, even if Facebook has the cheapest and most effective way to reach that audience.

- “There is also a substantial likelihood that the consistency, 30-day, and user-opt-out provisions (§ 501.2041(2)(b), (c), (f), (g)) fail to advance substantial governmental interests…it is substantially unlikely that the State will be able to show an interest sufficient to justify requiring private actors to apply their content-moderation policies—to speak—‘consistently.’” This is true, but also a reminder that “consistent” content moderation is an oxymoron and an impossibility.

- “there is likely no governmental interest sufficient to justify prohibiting a platform from changing its content-moderation policies” (bold added). Again, this court is saying that such efforts could not survive rational basis review.

- “Finally, there is likely no governmental interest sufficient to justify forcing platforms to show content to users in a ‘sequential or chronological’ order, see § 501.2041(2)(f), (g)—a requirement that would prevent platforms from expressing messages through post-prioritization and shadow banning” (bold added). Again, the court is saying that mandating the presentation order of content likely cannot survive rational basis review. This is a HUGE statement because it implicates many efforts to ban or restrict algorithmic ranking/sorting (which leave chronological order as the only presentation option) or to force Internet services to offer users a reverse-chronological-order option (see, e.g., the Minnesota bill I trashed). This opinion indicates those rules categorically won’t survive the First Amendment. There has been so much regulator interest in controlling or eliminating social media’s content algorithms, and this ruling could stop ALL of those efforts cold.

I can’t stress enough how important these rulings can be if they survive further proceedings. The panel has made it extremely difficult to regulate Internet services’ editorial decisions. I know state legislators normally don’t care whether or not their efforts are constitutional, but they really need to read and digest this opinion before launching further ill-advised bills to control the Internet. And they are going to be bummed/pissed when the legislative analysts score their bills as unconstitutional or overly costly to defend against a constitutional challenge in court.

The panel also questions the law’s tailoring:

> §§ 106.072(2) and 501.2041(2)(h) prohibit deplatforming, deprioritizing, or shadow-banning candidates regardless of how blatantly or regularly they violate a platform’s community standards and regardless of what alternative avenues the candidate has for communicating with the public. These provisions would apply, for instance, even if a candidate repeatedly posted obscenity, hate speech, and terrorist propaganda. The journalistic-enterprises provision requires platforms to allow any entity with enough content and a sufficient number of users to post anything it wants—other than true “obscen[ity]”—and even prohibits platforms from adding disclaimers or warnings. As one amicus vividly described the problem, the provision is so broad that it would prohibit a child-friendly platform like YouTube Kids from removing—or even adding an age gate to—soft-core pornography posted by PornHub, which qualifies as a “journalistic enterprise” because it posts more than 100 hours of video and has more than 100 million viewers per year. That seems to us the opposite of narrow tailoring.

Transparency

The panel says that the state has a legitimate interest in seeing that consumers are “fully informed about the terms of [user-data-for-free-services] transaction and aren’t misled about platforms’ content-moderation policies.” OK, but if the panel were applying proper strict scrutiny, or even intermediate scrutiny, I would vigorously question the means-fit of that goal. Still, even under Zauderer, “NetChoice still might establish during the course of litigation that these [disclosure] provisions are unduly burdensome and therefore unconstitutional.” Indeed, the plaintiffs have not yet invested much energy in provision-by-provision deconstructions of each transparency requirement, so I remain hopeful that the lower court will resurrect the injunction when the plaintiffs explain the problems with these provisions more precisely.

To be more specific, in addition to the 60-day access right for terminated users, the panel says the following provisions are likely constitutional:

- “A social media platform must publish the standards, including detailed definitions, it uses or has used for determining how to censor, deplatform, and shadow ban.” This is a mandate to publish editorial policies. As I explain in my article, this is not possible. Publishers make up new policies on the fly, they make legitimate mistakes applying their policies, and they sometimes intentionally deviate from their policies due to their editorial judgments about the relative tradeoffs. It is unduly burdensome to try to codify and disclose the infinite number of considerations that drive editorial decisions. Plus, in my article, I explain how the discovery implications of this requirement are troubling, because the state can demand to see all material that the services subjected to editorial review to judge if all the policies have been disclosed, and this conflicts with the McManus case. On the plus side, if the services can comply with this requirement using a catchall TOS disclaimer that they sometimes make editorial decisions that don’t reflect any of the disclosed policies, then this requirement is meaningless.

- “A social media platform must inform each user about any changes to its user rules, terms, and agreements before implementing the changes.” To the extent this provision restates the contract amendment requirement that users must be told before any TOS amendments take effect (see, e.g., Douglas v. Talk America), this provision just codifies what Internet services are already required to do. To the extent that this says or implies that Internet services cannot change their editorial standards without giving users notice in advance, then it raises the same problems associated with the prior bullet point, including publishers’ need for dynamic editorial decision-making, the likelihood that mistakes will be made, and the concerns about overreaching discovery into every aspect of Internet services’ editorial operations. It would also conflict with the panel’s analysis on the 30-day-change restriction.

- “A social media platform must: 1. Provide a mechanism that allows a user to request the number of other individual platform participants who were provided or shown the user’s content or posts. 2. Provide, upon request, a user with the number of other individual platform participants who were provided or shown content or posts.” Many services already provide readership statistics to users. For example, YouTube provides view counts, and Twitter provides “tweet activity” statistics. Other services don’t provide stats; for example, Facebook doesn’t provide users with statistics about views of their personal posts. I’ll note that it may not be a good idea to provide readership statistics because it may motivate users to rack up views rather than add substantively to the discourse. If so, the availability of statistics could change the user community for the worse. I’ll also note that reporting readership statistics involves a lot of judgment calls (like how to account for views by automated scripts or bots), which makes it a ripe area for litigation by users and a messy area for discovery. Unlike Texas’ law, Florida’s law doesn’t ban services from getting users to waive their rights. I think services might make users waive this right in their TOSes to avoid the unwanted consequences.

- “A social media platform that willfully provides free advertising for a candidate must inform the candidate of such in-kind contribution. Posts, content, material, and comments by candidates which are shown on the platform in the same or similar way as other users’ posts, content, material, and comments are not considered free advertising.” This is an odd provision. How many services are providing free ads to political candidates? And if they are, how many are not already telling the candidates? I assume that Internet services will “comply” with this provision by nixing free ads to candidates. Problem solved? (As I’ve indicated before, I think social media services should categorically exit political advertising, paid or free, which would also solve the problem.)

The panel did strike down the mandatory explanations provision, calling it “particularly onerous.” The panel explains that it:

> is unduly burdensome and likely to chill platforms’ protected speech. The targeted platforms remove millions of posts per day; YouTube alone removed more than a billion comments in a single quarter of 2021. For every one of these actions, the law requires a platform to provide written notice delivered within seven days, including a “thorough rationale” for the decision and a “precise and thorough explanation of how [it] became aware” of the material. This requirement not only imposes potentially significant implementation costs but also exposes platforms to massive liability…Thus, a platform could be slapped with millions, or even billions, of dollars in statutory damages if a Florida court were to determine that it didn’t provide sufficiently “thorough” explanations when removing posts. It is substantially likely that this massive potential liability is “unduly burdensome” and would “chill[] protected speech”—platforms’ exercise of editorial judgment—such that § 501.2041(2)(d) violates platforms’ First Amendment rights.

Two notes about this. First, the Texas law contains an explanations requirement for all spam filtering decisions (in addition to the parallel explanations requirement for “censoring” user posts). The plaintiffs in the Texas case didn’t challenge the spam filtering provision, but this opinion raises obvious constitutional concerns about it (assuming the Fifth Circuit is open to listening to other circuits, which it probably won’t be).

Second, many privacy laws require, or will require, “explanations” for automated decision-making (including “AI”) or other privacy-related decisions. This opinion doesn’t directly engage those laws, but it casts a constitutional shadow on those rules, especially where the automated decision-making takes place using an algorithm that encodes editorial policies or rules, such as social media algorithms.

A Roundup of My Favorite Lines

This opinion has lots of great quotes. I’ve bolded a number of them above, but to make sure you didn’t miss any highlights, here is a rundown of the lines I thought were most remarkable:

- “social-media platforms aren’t ‘dumb pipes’”

- “the platforms develop particular market niches, foster different sorts of online communities, and promote various values and viewpoints”

- “social-media platforms are not—in the nature of things, so to speak—common carriers”

- “Neither law nor logic recognizes government authority to strip an entity of its First Amendment rights merely by labeling it a common carrier”

- “there’s no legitimate—let alone substantial—governmental interest in leveling the expressive playing field”

- “private actors have a First Amendment right to be “unfair”—which is to say, a right to have and express their own points of view”

- “Even if other channels aren’t as effective as, say, Facebook, the State has no substantial (or even legitimate) interest in restricting platforms’ speech”

- “there is likely no governmental interest sufficient to justify prohibiting a platform from changing its content-moderation policies”

- “there is likely no governmental interest sufficient to justify forcing platforms to show content to users in a ‘sequential or chronological’ order”

Case Citation: NetChoice, LLC v. Attorney General, State of Florida, 2022 WL 1613291 (11th Cir. May 23, 2022)

Case library (see also NetChoice’s library)

- 11th Circuit decision on preliminary injunction

- Florida’s Rule 28(j) disclosure regarding the repeal of the law’s theme park exception (the amendment was done as a jab at Disney, not to correct the constitutional deficiencies of the law)

- Florida’s reply brief

- Amicus briefs in support of NetChoice/CCIA:

- Cato

- CDT

- Chamber of Progress et al.

- Copia Institute

- Chris Cox

- EFF

- Internet Association

- IP Justice

- Knight First Amendment Institute

- First Amendment law professors (I joined this brief)

- RCFP et al.

- TechFreedom

- NetChoice/CCIA appellee brief. My blog post.

- Amicus brief from Institute for Free Speech claiming to be in support of neither side, but mostly in support of Florida’s law

- Amicus briefs in support of Florida:

- Florida’s opening brief on appeal. Blog post.

- Florida’s answer to the complaint.

- District court June 30, 2021 opinion enjoining most of the law. Blog post.

- Amicus Brief opposing the preliminary injunction from Leonid Goldstein. Blog post.

- Blog post recapping the Preliminary Injunction Hearing Against Florida’s Social Media Censorship Law

- Plaintiffs’ Reply Brief to Florida’s Opposition. Blog post.

- Florida Opposition to Preliminary Injunction. Blog post.

- Amicus briefs in support of the preliminary injunction

- Blog post on the amicus briefs

- Preliminary injunction brief

- Blog post on preliminary injunction brief

- NetChoice v. Moody complaint.

- Text of SB 7072. Blog post on the statute.